Deepfakes are no longer a novelty; these days they are an internet-wide problem!

Since their first appearance on the internet, the “deeply fake” deepfakes have come a long way, evolving from a handful of manipulated videos into a major threat!

And guess what! They are not going to stop anytime soon!

From casting Nicolas Cage as Superman’s love interest Lois Lane to inserting famous Hollywood actresses Scarlett Johansson and Gal Gadot into pornographic videos, AI-enabled deepfake videos have become a serious concern.

And now, the very technology used to make deepfake videos is being tried as a solution to end the horrors of deepfakes and the catastrophic consequences they may cause!

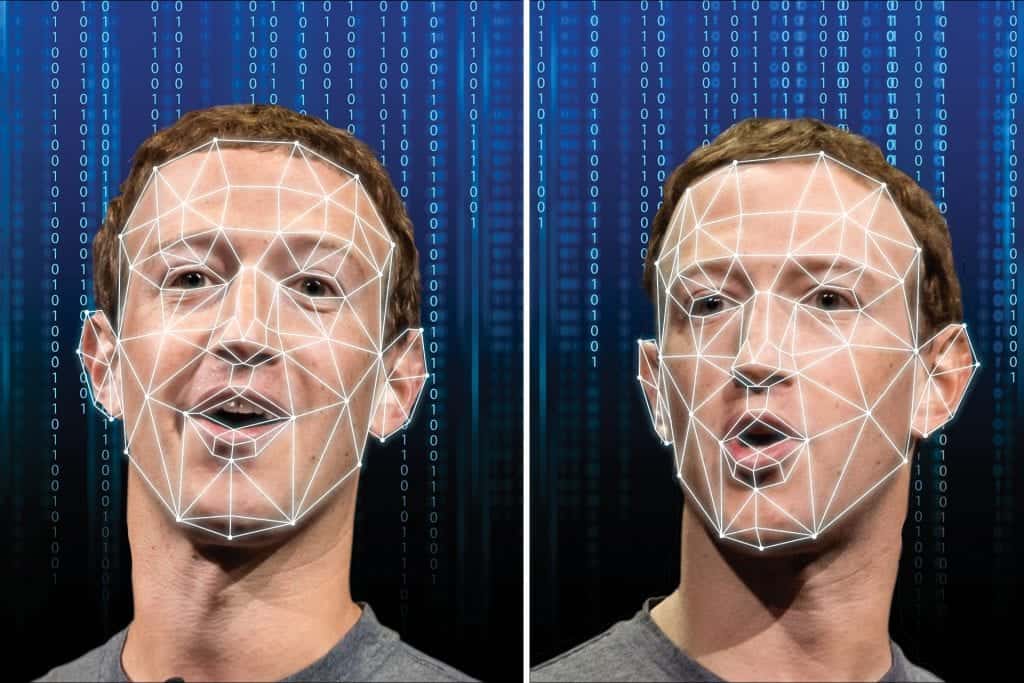

Deepfakes are simply fake or falsified videos. They are basically synthetic media where a person in an existing video or image is replaced or swapped with someone else’s likeness. Often referred to as face-stitching technology, it lets people digitally insert someone in video scenes they were never in.

The term deepfake was coined from ‘Deep Learning’ and ‘Fake’ because deepfakes are created by exploiting deep learning technology. Deep learning is a subset of AI in which neural networks learn from massive data sets; here, a trained network is used to synthesize the fake.

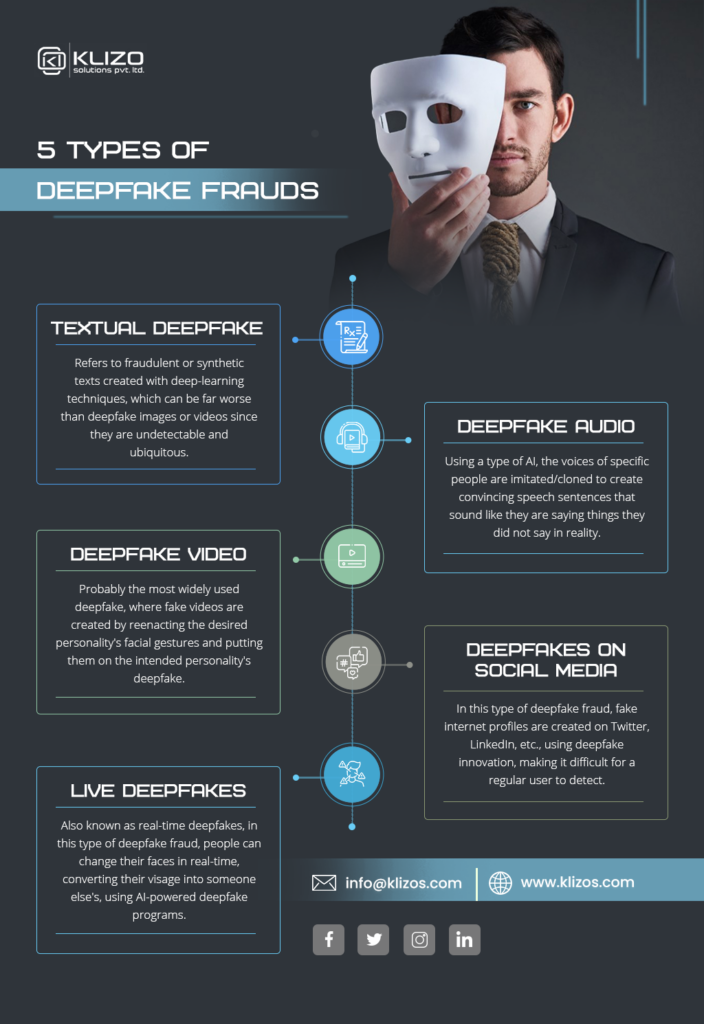

Initially, deepfake technology was used to create fake videos only. But over the years, different types of it have emerged, such as textual deepfakes, deepfake audio, live deepfakes, etc.

Did you see the video of Facebook founder Mark Zuckerberg in which he reveals how the social networking platform ‘owns’ its users and uses their data to manipulate them? Was it not deeply convincing?

Well, videos like this are not genuine. They are deeply fake ‘deepfake’ videos.

Deepfake, or face-stitching, technology lets people digitally insert someone into scenes they were never really in. From casting Nicolas Cage as Superman’s love interest Lois Lane to making Obama say things he would never say, or even inserting famous Hollywood actresses Scarlett Johansson and Gal Gadot into pornographic videos, AI-enabled deepfake videos have become a serious concern.

And now the very technology that has been used to make deepfake videos is being tried as a solution to end the horrors of deepfakes and the catastrophic consequences they might lead to.

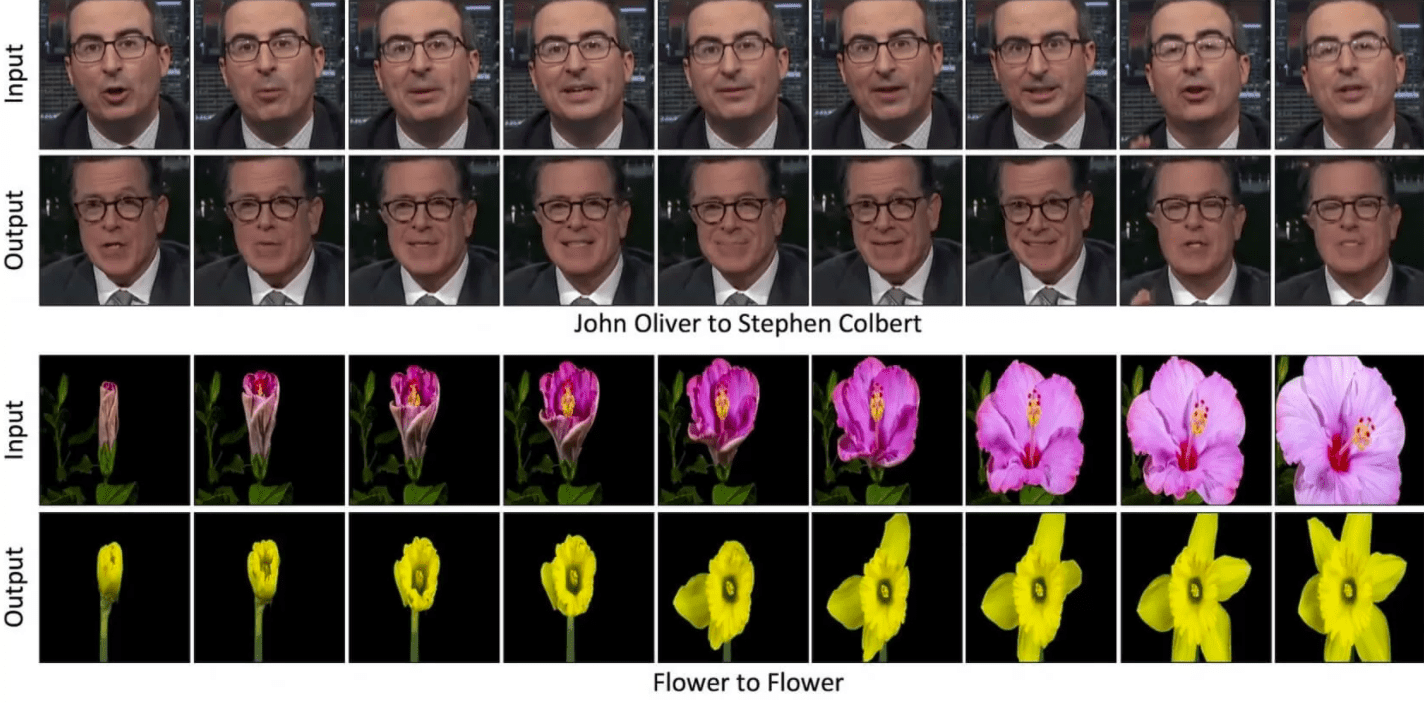

Image Source: Techspot

Deepfakes aren’t just low-quality fake videos in which one person’s face is crudely pasted over another’s and the fake can be spotted at a glance. As GANs, or generative adversarial networks, advanced, the synthesis engine was taught to create better forgeries that are hard to detect, resulting in deepfakes!

With new deepfake videos appearing on the web almost every day, it won’t be wrong to say that the videos of Barack Obama calling the now ex-president of the United States of America names, or of Tom Cruise’s presidential campaign, are old news!

Get over the video of Facebook founder Mark Zuckerberg in which he reveals how the social networking platform ‘owns’ its users and uses their data to manipulate them! Now, there are creepy deepfake videos of Keanu Reeves doing the rounds, leaving fans of The Matrix actor confused!

You see, deepfakes are no longer limited to putting one actor in place of another (remember the video of Will Smith as Neo in The Matrix). Today, deepfakes have gone a step further: someone phony is impersonating Reeves and even garnering millions of likes and followers, which is pretty unsettling!

There are rumors of the legendary actor Bruce Willis selling his “digital likeness rights” to a deepfake company. We don’t know whether it is true or not. But it tells us a lot about the growing invasion of deepfakes into the digital world!

You must have come across many deepfake memes or videos that have entertained you and made you laugh.

There are instances where people applauded deepfake videos and found them amusing, such as the character of Princess Leia from Star Wars: Rogue One remastered with deepfake technology, or the deepfake video of Tom Cruise playing Marvel’s Iron Man.

But unfortunately, the use of deepfakes never stayed limited to creating only fun and amusing content. They garnered worldwide attention for their negative applications, such as creating pornographic videos, fake news, revenge porn, financial fraud, and hoaxes.

Yes, just as deepfakes make it possible to create pornography featuring a person without their consent, they also make it possible to create political videos in which leaders are seen saying things they never really said. Take, for example, the deepfake video of Donald Trump, then US President, insulting Belgium.

While men are inserted into deepfakes mostly as a joke (for example, Nicolas Cage’s face superimposed onto Donald Trump’s, or the parody Better Call Trump: Money Laundering), deepfake videos of women tend to be pornographic.

So, there is no doubt that unless deepfakes are restrained and the necessary methods are adopted to detect and prevent deepfake attacks, fraudsters can use the technology to threaten reputations, cybersecurity, corporate finances, political elections, and individuals.

Deepfakes are a product of AI, or Artificial Intelligence. They leverage the powerful techniques of AI and machine learning to generate and manipulate visual and audio content to deceive others.

Yet it is AI itself that comes to the rescue against this AI-based technology. Let us look at how AI can help detect deepfakes, even those that are hard to spot with the naked eye!

A group of researchers from the University at Albany, SUNY, has found that unnatural eye movements, or odd blinking speeds or patterns in the video’s subject, can be a way to detect deepfake videos with the help of AI.

As GANs, or Generative Adversarial Networks, use still images as source material, blinking speeds and patterns cannot be replicated well in the generated deepfake videos. And it is with AI networks that Siwei Lyu, a computer scientist, and his team of researchers successfully identified this flaw.

But the quality of deepfake videos is improving. A thorough analysis of blink patterns and rates can still help detect deepfake videos, but the accuracy of this kind of detection is decreasing.
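To make the blink idea concrete, here is a minimal sketch of a rule-based blink-rate check. It is not the Albany team's method, which relied on trained networks. It assumes some other tool has already produced a per-frame "eye openness" score (for example an eye aspect ratio from facial landmarks). The function names, the 0.2 "eye closed" threshold, and the 5 blinks-per-minute cut-off are all illustrative assumptions.

```python
from typing import List


def count_blinks(ear: List[float], closed_below: float = 0.2,
                 min_frames: int = 2) -> int:
    """Count blinks as runs of at least `min_frames` consecutive frames
    whose eye-openness score falls below `closed_below` (assumed threshold)."""
    blinks = 0
    run = 0  # length of the current run of "closed" frames
    for value in ear:
        if value < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ended while the eye was still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear: List[float], fps: float,
                      closed_below: float = 0.2, min_frames: int = 2) -> float:
    """Convert the blink count into a rate, given the video frame rate."""
    if not ear or fps <= 0:
        raise ValueError("need at least one frame and a positive frame rate")
    minutes = len(ear) / fps / 60.0
    return count_blinks(ear, closed_below, min_frames) / minutes


def looks_suspicious(ear: List[float], fps: float,
                     min_rate: float = 5.0) -> bool:
    """Flag a clip whose blink rate is below `min_rate` (illustrative cut-off)."""
    return blinks_per_minute(ear, fps) < min_rate
```

For a 20-second clip at 30 frames per second containing six short blinks, `blinks_per_minute` comes out at about 18, so the clip is not flagged; a clip of the same length with no blinks at all is. As the article notes, newer deepfakes blink more naturally, so a check like this is at best one weak signal among several.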

Because of present constraints on processing resources, the still images used to make a deepfake video have to be processed at a fixed resolution. However, as the camera zooms in and out or shifts angle, the size and resolution of a face in a video keep changing, so the generated face has to be warped to fit. This turns the use of still images into a limitation of deepfake Generative Adversarial Networks (GANs).

And it is by exploiting this weakness that the group of researchers from the University at Albany found a way to detect deepfake videos. They trained their Artificial Intelligence networks to detect the artifacts that warping leaves behind in the facial features as the face size, and therefore its resolution, changes. The researchers achieved above 90% accuracy in detecting deepfake videos with this AI algorithm.
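One rough way to picture a warping artifact is as a mismatch in sharpness: a face that was generated at a fixed resolution and then stretched to fit tends to look smoother than the rest of the frame. The sketch below compares the variance of a simple Laplacian filter inside the face box with that of the whole frame. This is a hand-crafted stand-in, not the trained network the researchers used, and the function names, the box format, and the 0.5 threshold are assumptions made for illustration.

```python
from typing import List, Tuple

Gray = List[List[float]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]   # (top, left, bottom, right), end-exclusive


def laplacian_variance(img: Gray) -> float:
    """Variance of a 4-neighbour Laplacian; higher means more fine detail."""
    h, w = len(img), len(img[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (img[y - 1][x] + img[y + 1][x] +
                   img[y][x - 1] + img[y][x + 1] - 4 * img[y][x])
            responses.append(lap)
    if not responses:
        raise ValueError("image must be at least 3x3 pixels")
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def crop(img: Gray, box: Box) -> Gray:
    """Cut the face region out of the frame."""
    top, left, bottom, right = box
    return [row[left:right] for row in img[top:bottom]]


def sharpness_ratio(frame: Gray, face_box: Box) -> float:
    """Sharpness of the face region relative to the whole frame."""
    whole = laplacian_variance(frame)
    if whole == 0:
        raise ValueError("frame has no detail to compare against")
    return laplacian_variance(crop(frame, face_box)) / whole


def face_looks_oversmoothed(frame: Gray, face_box: Box,
                            threshold: float = 0.5) -> bool:
    """Flag faces much smoother than their surroundings (assumed threshold)."""
    return sharpness_ratio(frame, face_box) < threshold
```

A real detector would also have to cope with ordinary causes of soft faces, such as shallow depth of field or video compression, which is one reason learned models are preferred over a single fixed threshold.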

Another team from the University of California, Riverside (UCR), led by Electrical and Computer Engineering professor Amit Roy-Chowdhury, developed a neural network architecture that detects manipulated images at the pixel level with high precision. Using good AI against bad AI, i.e., deepfakes, this team showed that when someone tampers with a video, unnatural smoothing or feathering appears in the pixels on the boundaries of the objects in it.

Imagine what could happen if we could analyze the complete context of a deepfake video instead of just analyzing single frames of it for pixel artifacts.

Well, early in 2019, researchers from USC and UC Berkeley jointly released a study in which AI was trained to identify how a speaker’s posture and gestures change with the information being conveyed.

Since GANs depend on still images to produce deepfake videos, learning and reproducing these behaviors (posture, gesture, and tone) is virtually out of the question for them. As a result, this AI technique was reported to detect deepfake videos with a 95% accuracy rate (expected to go as high as 99% soon).
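The idea of a behavioral pattern can be sketched as a "signature": how strongly a person's movements rise and fall together while they speak. The example below is only an illustration under stated assumptions, not the study's pipeline. It assumes per-frame motion signals (hypothetical names such as `head_nod` and `hand_raise`) have already been extracted, builds a signature from their pairwise Pearson correlations, and measures how far a clip's signature is from a reference built on genuine footage.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Tuple

Signature = Dict[Tuple[str, str], float]


def pearson(a: List[float], b: List[float]) -> float:
    """Pearson correlation of two equally long signals (0.0 if one is flat)."""
    n = len(a)
    if n != len(b) or n < 2:
        raise ValueError("signals must have the same length, at least 2")
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def signature(signals: Dict[str, List[float]]) -> Signature:
    """Correlation between every pair of named motion signals."""
    names = sorted(signals)
    return {(p, q): pearson(signals[p], signals[q])
            for p, q in combinations(names, 2)}


def signature_distance(reference: Signature, clip: Signature) -> float:
    """Mean absolute difference over the signal pairs both signatures share."""
    shared = reference.keys() & clip.keys()
    if not shared:
        raise ValueError("signatures share no signal pairs")
    return sum(abs(reference[k] - clip[k]) for k in shared) / len(shared)
```

A clip in which the subject's head and hand movements relate to each other the way they do in the reference footage gives a distance near 0, while a clip where that relationship is reversed gives a distance near 2. Where to draw the line between the two is a tuning decision that this sketch leaves open.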

Image Source: Analytics Insight

The deepfake detection techniques are really impressive, especially where the same AI is being used against the AI that helps develop deepfakes.

As deepfakes are becoming more convincing with each passing day, some leading tech companies have taken the initiative to create their own deepfake detection techniques. As per a report, Intel’s FakeCatcher system can differentiate videos of real people from deepfake videos with 96% accuracy, and that too, in a matter of milliseconds.

Even LinkedIn, the world’s largest professional network community, is using an AI-powered “deep-learning-based model” to determine whether LinkedIn profile photos are AI-generated deepfake facial images. So yes, detecting deepfakes with AI is possible.

But when it comes to using AI to disrupt and destroy the very creation of deepfake videos, the results are still unknown. Once disinformation is out in the public domain in the form of deepfakes, it is difficult to combat it and erase its existence.

In the coming years, deepfakes will become more common, penetrating areas that we can’t even guess now. While their typical applications will continue to be prevalent in all forms of media, including social, political, and commercial campaigns, be ready to witness more serious forms of impersonation (e.g., fake persons conducting exams or providing fraudulent online services).

As per a report on recent deepfake attacks, there have been instances where fraudsters have started using deepfake technology to take part in interviews for remote jobs. An increase in the use of synthetic videos and images of other people to dupe authentication systems has also been observed. The threat of audio deepfakes, where employees are made to think the deepfake voice belongs to senior management, is increasing.

Since deepfake technology makes it extremely difficult to differentiate real pictures from fake ones, enterprises, organizations, and users must stay updated about the changing technologies like AI, Blockchain, etc., to protect themselves from deepfake attacks.

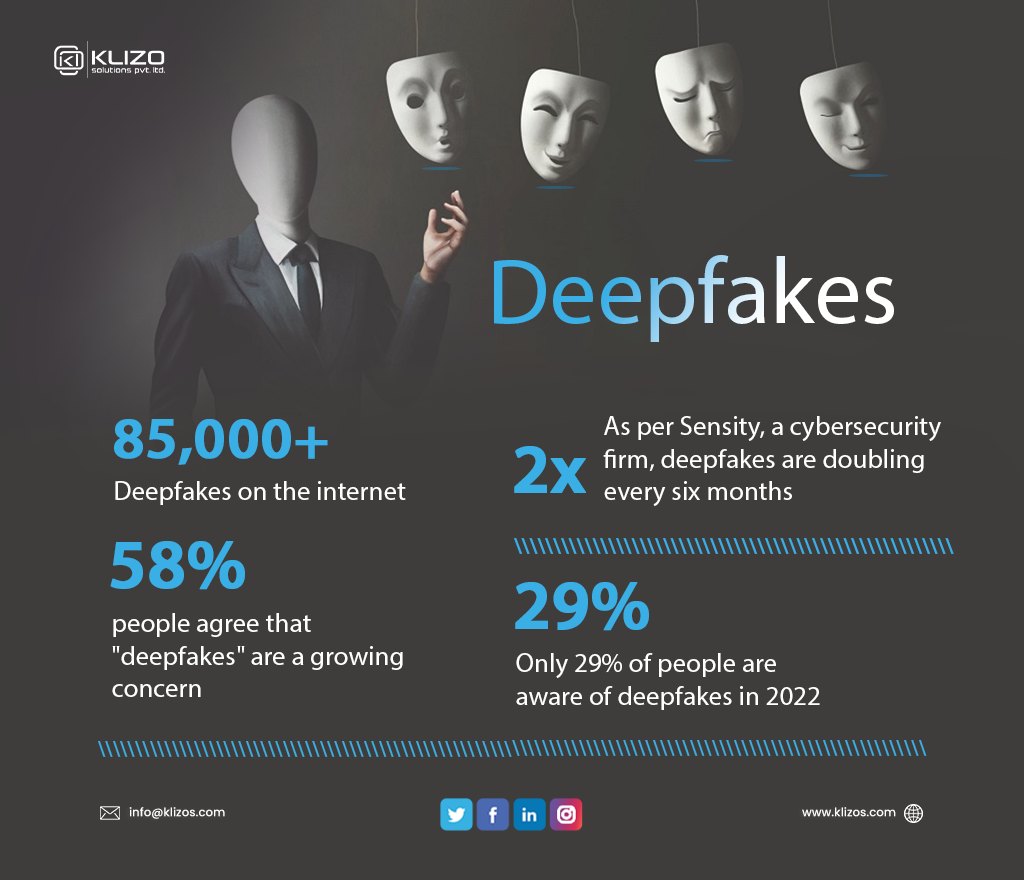

One by one, the entire world is waking up to the threats of deepfakes. But are we ready to fight this AI-enabled technology? The answer is still unknown as, even in 2022, only 29% of people know what deepfakes are!

Though scientists and technology experts are tirelessly trying to end this ‘tech weapon’, we are yet to get a final solution. While deepfake creators are leveraging AI capabilities to come up with ever harder-to-detect deepfake videos, the defenders are trying to use the same technology to detect and disrupt deepfake activities.

Only time will tell whether combating deepfake AI with AI will be possible! With more and more research being done on using AI against deepfakes, we can only hope for the best.

Joey Ricard

Klizo Solutions was founded by Joseph Ricard, a serial entrepreneur from America who has spent over ten years working in India, developing innovative tech solutions, building good teams, and admirable processes. And today, he has a team of over 50 super-talented people with him and various high-level technologies developed in multiple frameworks to his credit.
