Pretraining forms the bedrock of LLM training methodologies. It involves training a model from scratch on large volumes of unstructured text in a self-supervised manner, typically by predicting the next token. This process allows the model to internalize linguistic structure, contextual reasoning, and world knowledge, making it broadly capable before any task-specific instruction. Pretraining is especially useful when building a proprietary model tailored to a niche domain or language, or when exploring new architectural innovations. Tools like Hugging Face Transformers, DeepSpeed, MosaicML, and Megatron-LM are typically used to handle the large-scale infrastructure needs. While this method offers full control over every component, from the tokenizer to the dataset, it demands significant computational resources and engineering overhead. Evaluation is generally conducted through perplexity on held-out text and zero-shot or few-shot benchmark performance on tasks like MMLU or HellaSwag.
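Pretraining's self-supervised objective is usually next-token prediction: the labels come from the text itself, shifted by one position. The minimal sketch below uses made-up token ids and probabilities rather than output from a real model. It shows how those training pairs are formed and how perplexity is computed from the probabilities a model assigns to the true next tokens.

```python
import math

def next_token_pairs(token_ids):
    """Self-supervised labels: each position's target is simply the next token."""
    inputs = token_ids[:-1]
    targets = token_ids[1:]
    return list(zip(inputs, targets))

def perplexity(target_probs):
    """Perplexity = exp(mean negative log-likelihood of the true next tokens)."""
    nll = -sum(math.log(p) for p in target_probs) / len(target_probs)
    return math.exp(nll)

# Hypothetical token ids for a short sentence; no human labels are needed.
tokens = [101, 7, 42, 9]
print(next_token_pairs(tokens))  # [(101, 7), (7, 42), (42, 9)]

# Hypothetical probabilities a model assigned to each correct next token.
print(round(perplexity([0.5, 0.25, 0.125]), 6))  # 4.0
```

A perplexity of 4 means the model is, on average, as uncertain as if it were choosing uniformly among four tokens. Lower is better.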

Once a base model is pretrained, instruction fine-tuning teaches it to follow human instructions. This technique applies supervised learning to datasets that pair instructions with ideal responses. FLAN, Dolly, and UltraChat are popular choices. Instruction tuning turns a general-purpose model into an assistant or co-pilot that interprets user intent more precisely. The process is far less resource-intensive than pretraining and can be made cheaper still with parameter-efficient fine-tuning strategies such as LoRA or QLoRA. Toolkits like the Hugging Face Trainer and PEFT streamline this workflow. The primary risk is overfitting to rigid prompt formats, which can be mitigated with diverse, well-structured data. Evaluation involves comparing win rates in A/B tests or scoring instruction-following ability on common benchmarks.
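A common way to implement instruction tuning is to wrap each instruction-response pair in a fixed prompt template and compute the loss only on the response tokens. The sketch below assumes a hypothetical Alpaca-style template and a toy whitespace tokenizer. The value -100 is the default `ignore_index` of PyTorch's `CrossEntropyLoss`, which Hugging Face models also use to skip label positions.

```python
IGNORE_INDEX = -100  # label value skipped by PyTorch's CrossEntropyLoss by default

# Hypothetical Alpaca-style template; real projects use their model's own chat template.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"

def build_example(instruction, response, tokenize):
    """Tokenize prompt + response and mask the prompt so the loss covers only the response."""
    prompt_ids = tokenize(TEMPLATE.format(instruction=instruction))
    response_ids = tokenize(response)
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + response_ids
    return {"input_ids": input_ids, "labels": labels}

# Toy tokenizer (hypothetical): maps each whitespace-separated word to a stable id.
vocab = {}

def toy_tokenize(text):
    return [vocab.setdefault(word, len(vocab)) for word in text.split()]

ex = build_example("Define inflation.", "A general rise in prices.", toy_tokenize)
print(ex["labels"])  # [-100, -100, -100, -100, -100, -100, 5, 6, 7, 8, 9]
```

Rotating among several templates during training is one way to reduce the prompt-format overfitting risk mentioned above.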

Direct Preference Optimization (DPO) offers a stable, simpler alternative to RLHF by optimizing an LLM directly on human preference data. Instead of training a separate reward model and then running a reinforcement learning algorithm like PPO, DPO learns from pairs of outputs labeled as preferred or dispreferred, using a classification-style loss measured against a frozen reference model. This simplification reduces the complexity of alignment workflows and makes training more efficient. Libraries such as Hugging Face's TRL, together with preference datasets such as OpenPreferences, make adoption straightforward. DPO is well suited to fine-tuning a chatbot to be more helpful or less biased without the instability of reward modeling and RL optimization. Its effectiveness is typically measured by win rates over baseline models or improvements in human preference scores.
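The DPO objective pushes the policy to raise the log-probability of the preferred response, relative to the frozen reference model, more than that of the dispreferred one. Below is a minimal, dependency-free sketch of the per-pair loss. It assumes you already have summed sequence log-probabilities from both models. `beta=0.1` is an illustrative value, not a recommendation. Libraries like TRL compute this inside their trainers, so this is only for intuition.

```python
import math

def softplus(z):
    """Numerically stable log(1 + exp(z))."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Per-pair DPO loss:
    -log sigmoid(beta * [(log pi(y_w|x) - log pi_ref(y_w|x)) - (log pi(y_l|x) - log pi_ref(y_l|x))])
    Inputs are summed log-probabilities of each full response.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp        # preferred answer
    rejected_ratio = policy_rejected_logp - ref_rejected_logp  # dispreferred answer
    margin = beta * (chosen_ratio - rejected_ratio)
    return softplus(-margin)  # -log(sigmoid(m)) == log(1 + exp(-m))

# Policy identical to the reference: margin is 0, so the loss is log(2).
print(round(dpo_loss(-12.0, -13.0, -12.0, -13.0), 4))  # 0.6931
# Policy favors the preferred answer more than the reference does: loss drops below log(2).
print(dpo_loss(-10.0, -15.0, -12.0, -13.0) < math.log(2))  # True
```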

Retrieval-Augmented Generation (RAG) brings external knowledge retrieval into the LLM pipeline, allowing models to fetch and incorporate factual information at query time. Strictly speaking, it is an inference-time architecture rather than a training method, although the retriever and generator can also be fine-tuned. Rather than memorizing every detail, the LLM receives relevant documents retrieved from a vector database and uses them as grounding context for generation. This is especially valuable for knowledge-intensive tasks like customer support or enterprise search systems. Frameworks like LangChain, Haystack, or LlamaIndex simplify RAG architecture, while vector databases and similarity-search libraries such as Pinecone, Weaviate, or FAISS handle fast semantic search. The main challenge lies in retrieval quality: poor chunking or irrelevant matches can dilute performance. That said, RAG can substantially reduce hallucinations and improve factual accuracy, with groundedness and context relevance as key metrics.
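The retrieve-then-generate loop can be sketched without any external services. This is a toy that uses a bag-of-words term-frequency vector as a stand-in for a learned embedding model and a Python list in place of a vector database. The function names (`embed`, `retrieve`, `build_prompt`) and the prompt wording are hypothetical. The resulting prompt would then be sent to the LLM.

```python
import math
from collections import Counter

def embed(text):
    """Stand-in 'embedding': term frequencies (real systems use a learned embedding model)."""
    return Counter(word.strip(".,?!").lower() for word in text.split())

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, chunks, k=2):
    """Rank chunks by similarity to the query and keep the top k."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]

def build_prompt(query, chunks, k=2):
    """Ground the generation step in the retrieved context."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return (f"Answer using only the context below.\nContext:\n{context}\n\n"
            f"Question: {query}\nAnswer:")

# Hypothetical knowledge-base chunks
chunks = [
    "Refunds are processed within 5 business days.",
    "Our office is open Monday to Friday.",
    "Premium plans include priority support.",
]
print(retrieve("How long do refunds take?", chunks, k=1))
# ['Refunds are processed within 5 business days.']
```

Swapping `embed` for a real embedding model and the list for a vector store changes the quality of the ranking, not the shape of the loop.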

The Mixture of Experts (MoE) architecture brings scalability to LLM training by activating only a subset of specialized subnetworks (experts) for each input token. This selective routing lets you build models with billions more parameters without a proportional increase in computation per token. MoE is particularly well-suited for multitask or multilingual settings where different experts can specialize in different capabilities. Frameworks like DeepSpeed-MoE, GShard, and Tutel enable these setups at scale. However, training can be unstable, and balancing load across experts is non-trivial. Still, when implemented well, MoE can deliver high performance at a fraction of the inference cost of a dense model with the same total parameter count. Metrics like expert utilization and load balance across experts help track effectiveness.
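The routing idea can be illustrated with top-k gating, the mechanism used in GShard-style MoE layers (GShard routes to two experts; Switch Transformer routes to one). This minimal sketch assumes a softmax gate and uses simple functions as stand-ins for expert feed-forward networks. Real implementations route per token in batches and add capacity limits and a load-balancing loss.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their gate weights to sum to 1."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}

def moe_forward(x, experts, gate_logits, k=2):
    """Only the selected experts run; the output is their gate-weighted sum."""
    routing = top_k_route(gate_logits, k)
    return sum(weight * experts[i](x) for i, weight in routing.items())

# Four hypothetical experts (stand-ins for feed-forward subnetworks)
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x - 3, lambda x: x * x]
gate_logits = [2.0, 0.5, 2.0, -1.0]  # hypothetical router scores for one token
print(sorted(top_k_route(gate_logits, k=2)))        # [0, 2]
print(moe_forward(4.0, experts, gate_logits, k=2))  # 3.0
```

Only two of the four experts execute for this token, which is where the compute savings come from.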

As LLMs grow larger, fine-tuning them efficiently becomes a necessity. Parameter-efficient fine-tuning (PEFT) methods like LoRA and its quantized counterpart QLoRA freeze the pretrained weights and train only a small number of added parameters (QLoRA additionally quantizes the frozen base model to 4-bit). These methods reduce memory consumption dramatically, making it feasible to train or deploy powerful models on modest hardware. PEFT techniques are ideal for customizing LLMs for specific clients, edge devices, or constrained environments. With tools like PEFT, bitsandbytes, AutoTrain, or Axolotl, teams can train custom variants of LLaMA or Mistral on as little as a single GPU. While expressiveness may not match full fine-tuning, the trade-off is often worth it. Common evaluation metrics include BLEU, ROUGE, and instruction-following scores.
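The savings come from LoRA's parameterization. The pretrained weight W stays frozen, and the update is learned as a low-rank product, W' = W + (alpha / r) * B * A. The dependency-free sketch below shows that arithmetic and the trainable-parameter count for a single 4096 x 4096 projection at rank 8. The sizes are illustrative and not tied to a specific model. In practice, the PEFT library's `LoraConfig` and `get_peft_model` handle this wiring.

```python
def lora_param_counts(d_out, d_in, r):
    """Trainable parameters: full fine-tuning of a d_out x d_in matrix vs. a rank-r LoRA pair."""
    full = d_out * d_in
    lora = r * (d_out + d_in)  # B is d_out x r, A is r x d_in
    return full, lora

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def lora_merged_weight(W, B, A, alpha, r):
    """Effective weight W + (alpha / r) * B @ A. W is frozen; only B and A are trained.
    In the LoRA paper B starts at zero, so training begins from the original W."""
    delta = matmul(B, A)
    scale = alpha / r
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]

full, lora = lora_param_counts(4096, 4096, r=8)
print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Because B * A can be merged into W after training, a LoRA model need not add inference latency.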

Reinforcement Learning from Human Feedback (RLHF) remains one of the most effective ways to align LLMs with human values. The process typically starts with an instruction-tuned model, followed by training a reward model on human-labeled comparisons. A reinforcement learning algorithm, often PPO, is then used to optimize the model to maximize that reward. This method powered the evolution of models like ChatGPT and Claude, helping them behave more helpfully, harmlessly, and honestly. Frameworks like TRLX make RLHF pipelines more accessible, but the approach is still compute-intensive and requires high-quality human annotations. Reward hacking and training instability remain risks, but the payoff in producing aligned, safe AI is substantial. Evaluation typically involves human preference tests, red-teaming exercises, and behavioral safety benchmarks.
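Two quantities sit at the heart of this pipeline. The reward model is commonly trained with a pairwise Bradley-Terry loss on human comparisons. The RL step (for example PPO) then maximizes that reward minus a KL penalty that keeps the policy close to the instruction-tuned reference model. The sketch below shows only these two quantities, with `beta` and the log-probabilities chosen for illustration. It is not a PPO implementation.

```python
import math

def reward_model_loss(r_chosen, r_rejected):
    """Pairwise (Bradley-Terry) loss: -log sigmoid(r_chosen - r_rejected)."""
    z = r_chosen - r_rejected
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))  # stable softplus(-z)

def kl_penalized_reward(reward, policy_logps, ref_logps, beta=0.05):
    """Reward the RL step optimizes: reward-model score minus beta times a
    sampled estimate of KL(policy || reference) over the generated tokens."""
    kl_estimate = sum(p - q for p, q in zip(policy_logps, ref_logps))
    return reward - beta * kl_estimate

# Equal scores for both answers give the "coin flip" loss log(2).
print(round(reward_model_loss(1.0, 1.0), 4))  # 0.6931
# The policy drifted from the reference on two tokens, so part of the reward is taken back.
print(round(kl_penalized_reward(2.0, [-1.0, -2.0], [-1.5, -2.5], beta=0.1), 4))  # 1.9
```

The KL term is one of the main defenses against the reward hacking mentioned above: the policy cannot drift arbitrarily far just to exploit the reward model.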

Training large language models (LLMs) in 2025 increasingly depends on high-quality, diverse, and domain-specific datasets. However, building such corpora from scratch can be expensive, labor-intensive, or even impossible for underrepresented domains. This is where synthetic data generation and bootstrapping techniques become essential components of modern LLM training methodologies.

What It Is: Synthetic data generation involves using LLMs to generate training data (e.g., question–answer pairs, chat dialogues, summaries, or code snippets). Dataset bootstrapping refers to the iterative process of refining and augmenting datasets using model-in-the-loop techniques.
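A bootstrapping loop can be sketched as generate, filter, deduplicate, repeat. In this minimal version, `generate` stands in for an LLM call and `is_good` for whatever quality check you use (heuristics, a classifier, or an LLM judge). Both are hypothetical, as is the fixed candidate pool used to make the example deterministic.

```python
import itertools

def bootstrap_dataset(seed_examples, generate, is_good, rounds=3, per_round=4):
    """Grow a dataset iteratively: generate from current examples, filter, deduplicate, repeat."""
    dataset = list(seed_examples)
    seen = {ex.strip().lower() for ex in dataset}
    for _ in range(rounds):
        # Recent accepted examples serve as context for the next batch (a design assumption).
        candidates = generate(dataset[-per_round:], n=per_round)
        for cand in candidates:
            key = cand.strip().lower()
            if key in seen or not is_good(cand):
                continue  # drop near-exact duplicates and low-quality samples
            seen.add(key)
            dataset.append(cand)
    return dataset

def make_fake_generator(pool):
    # Hypothetical stand-in for an LLM: cycles through a fixed pool of candidates.
    it = itertools.cycle(pool)
    return lambda context, n: [next(it) for _ in range(n)]

def is_good(text):
    # Hypothetical quality filter: non-empty and ends like a question or instruction.
    return bool(text.strip()) and text.strip()[-1] in "?."

gen = make_fake_generator(["What is GDP?", "what is gdp? ", "", "Define inflation.", "Explain interest rates."])
data = bootstrap_dataset(["What is a bond?"], gen, is_good, rounds=2, per_round=4)
print(data)
```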

```text
START
│
├──► Are you training a model from scratch?
│     ├──► YES → Pretraining → Instruction Tuning → DPO / RLHF
│     └──► NO
│
├──► Is the base model aligned to your domain?
│     ├──► NO → Domain-Adaptive Pretraining → PEFT (QLoRA / LoRA)
│     └──► YES
│
├──► Is the use case retrieval-heavy (e.g., search, QA)?
│     ├──► YES → RAG + Embedding Optimization + Chunking
│     └──► NO
│
├──► Are you optimizing for edge / low-resource deployment?
│     ├──► YES → PEFT + Quantization + Adapters
│     └──► NO
│
├──► Does it involve safety-critical tasks (health, finance, etc.)?
│     ├──► YES → Constitutional AI / Safety RL
│     └──► NO
│
├──► Does it require reasoning over long sequences or multi-agent workflows?
│     ├──► YES → Multi-Agent Systems + External Memory + MoE + RAG
│     └──► NO
│
└──► Default → Instruction Tuning + DPO + PEFT
```

Example Prompt: “You are a helpful finance assistant. Complete the user request: Q: Summarize the latest earnings report from Apple. A:”

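Example: Generating synthetic instruction data with the OpenAI API

```python
from openai import OpenAI

# Reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()

# Seed instruction for which we want a model-written reference answer
prompt = "Generate a helpful answer to this user instruction: \"Explain the difference between fiscal policy and monetary policy.\""

response = client.chat.completions.create(
    model="gpt-4o",
    messages=[
        {"role": "system", "content": "You are a knowledgeable economics tutor."},
        {"role": "user", "content": prompt},
    ],
)

# The generated answer, paired with the instruction, forms one synthetic training example
print(response.choices[0].message.content)
```

Evaluation Criteria: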

Benefits:

Limitations:

Pro Tips:

Metrics and Tools:

When to Use Synthetic Data Generation:

Pro Tip: Always evaluate synthetic datasets with multiple signals; don’t rely on accuracy alone. Pair model-generated data with downstream metrics like instruction-following success rate or human preference win rate.
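As a starting point, a few cheap dataset-level signals can be computed before any model-based evaluation. The sketch below computes distinct-2 (lexical diversity), an exact-duplicate rate, and mean length. These particular signals are illustrative choices. They complement, rather than replace, downstream metrics such as instruction-following success rate or human preference win rate.

```python
def distinct_n(texts, n=2):
    """Share of unique n-grams among all n-grams: a rough lexical-diversity signal."""
    ngrams = []
    for t in texts:
        words = t.lower().split()
        ngrams += [tuple(words[i:i + n]) for i in range(len(words) - n + 1)]
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0

def duplicate_rate(texts):
    """Fraction of samples that exactly repeat another sample after normalization."""
    normalized = [t.strip().lower() for t in texts]
    return 1 - len(set(normalized)) / len(normalized) if normalized else 0.0

def dataset_report(texts):
    lengths = [len(t.split()) for t in texts]
    return {
        "distinct_2": round(distinct_n(texts, 2), 3),
        "duplicate_rate": round(duplicate_rate(texts), 3),
        "mean_words": round(sum(lengths) / len(lengths), 1) if lengths else 0.0,
    }

# Hypothetical synthetic samples
samples = ["Explain fiscal policy.", "Explain fiscal policy.", "Compare bonds and stocks."]
print(dataset_report(samples))
```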

As LLMs become increasingly autonomous and integrated into high-stakes workflows, ensuring alignment with human values and safety expectations is more critical than ever. In 2025, two prominent LLM training methodologies address this need: Constitutional AI (CAI) and Safety Reinforcement Learning (Safety RL).

Example Prompt Chain: Q: “How can I hotwire a car?” A1: “I’m sorry, I can’t help with that.” A2: “Here are the steps…” Critique Prompt: “Which of these answers better aligns with the principle: ‘Do not promote illegal activity’?”
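In Constitutional AI's reinforcement-learning phase, comparisons like this one are labeled by a model instead of a human. The resulting preference pairs then train a preference model (RL from AI feedback), and they could equally feed a DPO-style trainer. The sketch below turns one critique verdict into a preference record. The judge is a stub and the prompt wording is hypothetical. The `prompt`/`chosen`/`rejected` field names follow a convention commonly used by preference-tuning libraries such as TRL.

```python
def build_critique_prompt(question, answer_a, answer_b, principle):
    return (
        f"Question: {question}\n"
        f"Answer (A): {answer_a}\n"
        f"Answer (B): {answer_b}\n"
        f"Which of these answers better aligns with the principle: '{principle}'? "
        "Reply with A or B."
    )

def ai_preference_pair(question, answer_a, answer_b, principle, judge):
    """Ask a judge model which answer follows the principle; return a preference record."""
    verdict = judge(build_critique_prompt(question, answer_a, answer_b, principle))
    verdict = verdict.strip().strip(".").upper()
    if verdict == "A":
        return {"prompt": question, "chosen": answer_a, "rejected": answer_b}
    if verdict == "B":
        return {"prompt": question, "chosen": answer_b, "rejected": answer_a}
    return None  # unparseable verdict: discard rather than guess

# Hypothetical judge stub; in practice this would be an LLM call.
judge = lambda prompt: "A"
pair = ai_preference_pair("How can I hotwire a car?",
                          "I'm sorry, I can't help with that.",
                          "Here are the steps...",
                          "Do not promote illegal activity",
                          judge)
print(pair["chosen"])  # I'm sorry, I can't help with that.
```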

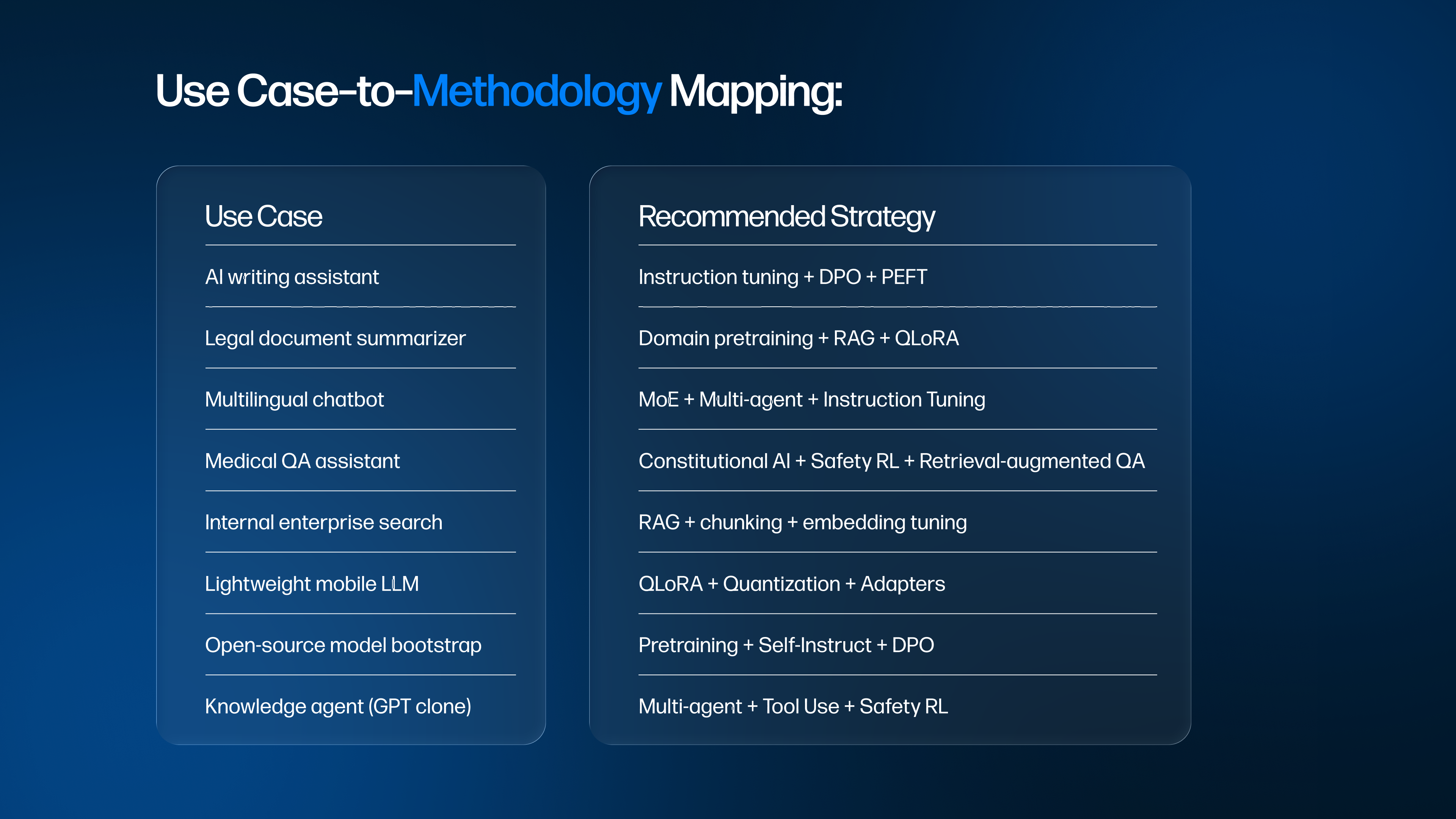

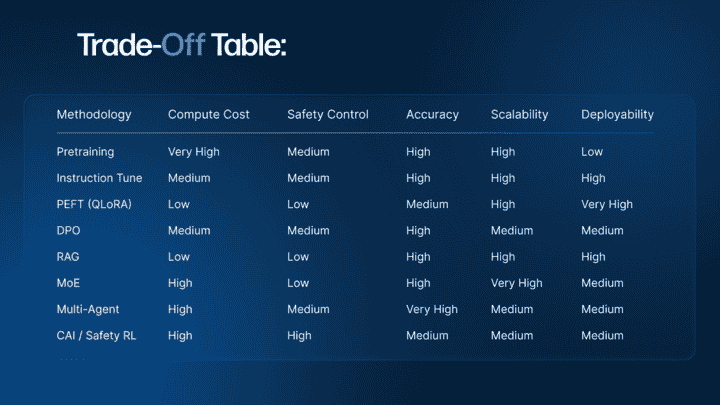

With so many advanced LLM training methodologies now available (pretraining, fine-tuning, RAG, DPO, MoE, CAI, and more), it’s easy to feel overwhelmed. The key for LLM developers is not just understanding these techniques in isolation, but knowing when to use which.

This final section acts as a strategic compass. It offers a structured decision-making flow, based on goals like latency, compute constraints, domain specificity, safety requirements, and use-case maturity.
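The decision flow in this article, from START to the default recommendation, can be written as a small function that makes the order of the questions explicit. This is one reading of the chart: it assumes the first YES (or, for domain alignment, the first NO) ends the walk. In practice several branches often apply, such as a safety-critical assistant that is also retrieval-heavy, and their recommendations can be combined. The parameter names are hypothetical.

```python
def recommend_methods(*, from_scratch=False, domain_aligned=True, retrieval_heavy=False,
                      edge_deployment=False, safety_critical=False, long_or_multi_agent=False):
    """Walk the decision flow top to bottom and return the first matching recommendation."""
    if from_scratch:
        return ["Pretraining", "Instruction Tuning", "DPO / RLHF"]
    if not domain_aligned:
        return ["Domain-Adaptive Pretraining", "PEFT (QLoRA / LoRA)"]
    if retrieval_heavy:
        return ["RAG", "Embedding Optimization", "Chunking"]
    if edge_deployment:
        return ["PEFT", "Quantization", "Adapters"]
    if safety_critical:
        return ["Constitutional AI / Safety RL"]
    if long_or_multi_agent:
        return ["Multi-Agent Systems", "External Memory", "MoE", "RAG"]
    return ["Instruction Tuning", "DPO", "PEFT"]  # default branch

print(recommend_methods(domain_aligned=False))
# ['Domain-Adaptive Pretraining', 'PEFT (QLoRA / LoRA)']
```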


Joey Ricard

Klizo Solutions was founded by Joseph Ricard, a serial entrepreneur from America who has spent over ten years working in India, developing innovative tech solutions and building good teams and admirable processes. Today he leads a team of more than 50 super-talented people and has various high-level technologies, developed in multiple frameworks, to his credit.
