Having nightmares about resource management for your AI applications? Say hello to Kubernetes AI deployment!

With AI’s increasing prominence in the digital workspace, many companies are striving to deploy, scale, and manage AI-driven applications efficiently. After all, the unique demands of AI apps require a flexible and scalable infrastructure that can handle rapid scaling, heavy computation, and complex resource allocation.

That’s where Kubernetes AI Deployment comes to the rescue!

Imagine the servers of your AI application as a bunch of kids at a chaotic birthday party: constantly moving, demanding attention, and occasionally getting into trouble. Now, Kubernetes is like the local magician you hired to keep all the kids engaged and perfectly arranged in one place.

In this article, we will dive deep into the features and benefits of Kubernetes AI deployment, and how this amazing container orchestration technology is revolutionizing the application development industry.

Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes, or K8s, is an absolute rockstar when it comes to automating the deployment, scaling, and management of containerized applications. Whether it’s a public cloud, an on-premises data center, or a hybrid environment, Kubernetes works like a charm across clusters of machines.

Before we dive into the nitty-gritty of Kubernetes orchestration and its advantages, let’s take a quick look at the core building blocks of Kubernetes.

A Pod is the smallest deployable unit in Kubernetes. It can host one or more tightly coupled containers that share the same storage volumes and network namespace.

A Node is a single machine within the Kubernetes cluster. This physical or virtual machine runs one or more pods. Kubernetes orchestration relies on multiple nodes working together.

A Cluster is a group of nodes. It is managed by a control plane (historically called the master node), which is responsible for distributing the workload among all the nodes.

The control plane manages the cluster’s workload and monitors its state. Its main components are the API server, etcd, the scheduler, and the controller manager.

Kubelet is an agent that runs on each node, managing the state of the pods on that node. It communicates with the control plane’s API server to make sure the containers described in each pod are running and healthy.

Kube-proxy handles network communication within the cluster. It makes sure requests reach the right pods, keeping all services accessible.

Kubernetes orchestration has taken the AI-based tech industry by storm, ensuring a smooth and seamless experience while managing the desired state of a cluster in AI-based applications.

Before Kubernetes AI integration was introduced, cluster management in AI-driven apps was a real nightmare for developers! Now, scaling neural networks with Kubernetes feels like a breeze, as it eliminates the complexity of managing clusters and nodes individually.

Managing containers and microservices can sometimes feel like fighting the final boss of your AI deployment game. Kubernetes is like the Batman of the cloud-native world: cool, strong, efficient, and always ready to tackle any bad situation. Let’s find out why your cloud-native Arkham City needs a caped crusader like Kubernetes.

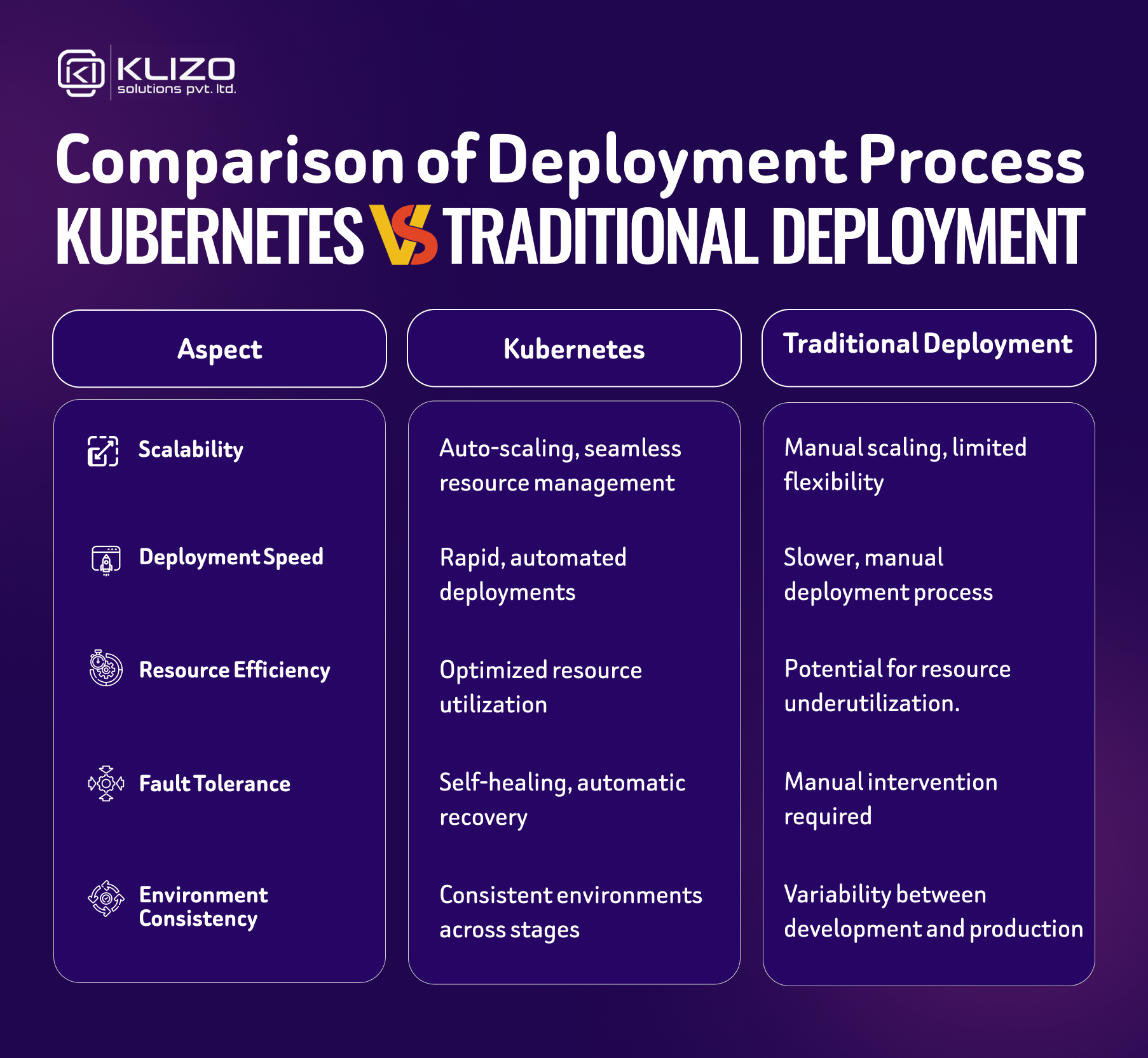

AI applications are composed of various interconnected elements like machine learning models, data processing engines, storage systems, and monitoring tools. Deploying all these components manually in a complex infrastructure can be extremely time-consuming and error-prone. A container orchestrator like Kubernetes tackles this challenge by automating container deployment and balancing the load across multiple nodes.

Kubernetes’ built-in autoscaling, such as the Horizontal Pod Autoscaler, lets users adjust resource allocation based on workload demand. This kind of dynamic scaling is very useful during both the training and inference phases of an AI application.

Example:

For a company training a deep neural network model, Kubernetes orchestration allows managing resources automatically. If the workload needs more processing power, Kubernetes can add pod replicas and, where a cluster autoscaler is configured, additional nodes.
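To make the scaling decision less of a black box, here is a minimal sketch of the ratio rule described in the Horizontal Pod Autoscaler documentation: desired replicas = ceil(current replicas × current metric / target metric). The function name, the default bounds, and the sample numbers are assumptions for illustration; the real controller also applies a tolerance band and stabilization behavior that this sketch leaves out.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Approximate the HPA ratio rule, clamped to [min_replicas, max_replicas].

    Hypothetical helper for illustration only; not part of any Kubernetes client.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Four inference pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

When the observed metric is above the target, the ratio is greater than one and the replica count grows; when it is below, the count shrinks, never leaving the configured bounds.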

AI-driven applications are known for being resource-hungry, consuming CPU, GPU, and memory resources for processing large datasets while running complex models. Kubernetes provides advanced resource management features, enabling users to optimize resources by dynamically allocating CPU, GPU, and memory resources across clusters on demand.

Also, the multi-tenancy features of Kubernetes allow multiple teams to share resources on a single cluster without interfering with each other. Teams can manage resource usage with namespaces, resource quotas, and node pools. Therefore, choosing Kubernetes AI integration means organizations don’t have to over-provision or under-utilize their hardware resources, which cuts costs significantly.

Example:

In a scenario where a data science team and a development team share the same infrastructure, each team can get its own namespace with its own resource quota, so one team’s workloads cannot eat into the other’s share of the cluster.
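A rough sketch of what that isolation could look like: the snippet below builds a Namespace and a ResourceQuota manifest for each team as plain Python dictionaries and prints them as JSON, which kubectl accepts alongside YAML. The team names and the CPU, memory, and GPU figures are made up for illustration, and the GPU quota line assumes the cluster exposes GPUs under the nvidia.com/gpu resource name.

```python
import json


def team_manifests(team, cpu, memory, gpus):
    """Return a Namespace and a ResourceQuota for one team (hypothetical helper)."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": team},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            "hard": {
                "requests.cpu": cpu,            # total CPU the team may request
                "requests.memory": memory,      # total memory the team may request
                "requests.nvidia.com/gpu": gpus,  # assumes the NVIDIA resource name
            }
        },
    }
    return [namespace, quota]


# Illustrative numbers only.
items = team_manifests("data-science", "32", "128Gi", "4") + \
        team_manifests("app-dev", "16", "32Gi", "0")
bundle = {"apiVersion": "v1", "kind": "List", "items": items}
print(json.dumps(bundle, indent=2))
```

Saving the output to a file and applying it would give each team a hard ceiling that the scheduler enforces at pod creation time.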

One of the greatest things about Kubernetes is its abstraction of the underlying infrastructure, allowing AI applications to run seamlessly across different environments, whether on-premises or in the cloud.

Kubernetes AI deployment provides a consistent platform to package and deploy containerized applications. It allows teams to develop, test, and deploy AI models across different environments. In short, Kubernetes lets teams focus on their models and applications rather than infrastructure-specific configurations.

Example:

Imagine a global team that operates AI models from various regions. They train the models in an on-premises data center but deploy them in a cloud environment. Kubernetes AI deployment enables this seamless transition, ensuring a standardized workflow across different platforms.

Managing the machine learning lifecycle is crucial for AI applications. It involves data ingestion, preprocessing, training, validation, and deployment. Kubeflow, an open-source ML toolkit built for Kubernetes, provides the right environment for all of these, ensuring smooth machine learning on Kubernetes.

Kubeflow offers a streamlined way to manage the entire ML pipeline, integrating data sources, automating model training, and simplifying deployment. It gives ML teams the tools needed to manage pipelines, track experiments, and monitor models in production.

Example:

A data science team might use Kubeflow to automate their ML workflow on Kubernetes, from data preprocessing to model validation. With Kubeflow Pipelines, they can visually design workflows, set up automated model training, and integrate performance metrics tracking, enhancing productivity and reducing time-to-deployment.

Mission-critical AI models need high availability, as interruptions and downtime can have significant consequences. Kubernetes offers high fault tolerance through automated failover, container restarts, and replication of pods across nodes.

Kubernetes’ self-healing capabilities ensure resilience in AI applications where real-time processing and high availability are required, which benefits applications in sectors like healthcare, finance, and autonomous driving.

Example:

In an e-commerce app utilizing AI for real-time recommendations, Kubernetes’ self-healing plays a crucial role in maintaining continuous availability. By restarting failed pods or rescheduling them onto healthy nodes, Kubernetes ensures the recommendation engine stays operational even amidst hardware failures.
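As a hedged illustration of the self-healing idea, the sketch below builds a Deployment manifest with three replicas and a liveness probe, then prints it as JSON for kubectl. The app name, image, port, and /healthz path are hypothetical; the probe only works if the container actually serves that endpoint.

```python
import json

APP = "recommender"  # hypothetical application name

deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": APP},
    "spec": {
        # Three copies, so losing one pod or node does not take the service down.
        "replicas": 3,
        "selector": {"matchLabels": {"app": APP}},
        "template": {
            "metadata": {"labels": {"app": APP}},
            "spec": {
                "containers": [{
                    "name": APP,
                    "image": "registry.example.com/recommender:1.0",  # placeholder image
                    "ports": [{"containerPort": 8080}],
                    # The kubelet restarts the container after repeated probe failures.
                    "livenessProbe": {
                        "httpGet": {"path": "/healthz", "port": 8080},
                        "initialDelaySeconds": 10,
                        "periodSeconds": 5,
                        "failureThreshold": 3,
                    },
                }]
            },
        },
    },
}

print(json.dumps(deployment, indent=2))
```

The replica count covers node-level failures, while the liveness probe covers a container that is still running but no longer responding.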

AI workloads like deep learning tasks are extremely resource-hungry and require a great deal of GPU capacity. Kubernetes supports GPUs through vendor device plugins, allowing organizations to allocate GPU resources to pods according to their demands. Sharing a GPU across multiple AI workloads is also possible, though it typically relies on vendor-specific features rather than on Kubernetes alone.

With Kubernetes’ GPU scheduling capabilities, users can configure GPU resources at a granular level, ensuring that each workload has access to required computational power without over-provisioning.

Example:

A video analytics company can leverage Kubernetes AI deployment to allocate GPU resources for object detection and classification models throughout its product line. By sharing these GPU resources across various applications, they can ensure high performance without the need for additional hardware investment, reducing operational costs while maintaining efficiency.
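For a sense of what a GPU request looks like in practice, here is a minimal sketch that builds a Pod manifest asking for one GPU. It assumes the NVIDIA device plugin is installed on the cluster, which is what exposes the nvidia.com/gpu resource name; the pod name and image are placeholders.

```python
import json


def gpu_pod(name, image, gpus=1):
    """Build a Pod manifest that requests whole GPUs (hypothetical helper)."""
    if gpus < 1:
        raise ValueError("request at least one GPU")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "OnFailure",
            "containers": [{
                "name": name,
                "image": image,
                # GPUs are declared under limits; the scheduler then places the
                # pod only on a node with that many free GPUs.
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


pod = gpu_pod("object-detector", "registry.example.com/object-detector:1.0")
print(json.dumps(pod, indent=2))
```

GPUs are requested in whole units this way, so any sharing scheme would sit on top of this and depend on the GPU vendor’s tooling.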

Kubernetes offers various security features to manage AI workloads securely. With Role-Based Access Control (RBAC), Secrets management, and network policies, only authorized users and services can access sensitive data or computational resources. This is crucial for applications that handle sensitive data like financial transactions or medical records.

Kubernetes orchestration also allows teams to define resource access rules, encrypt sensitive data, and manage identity and permissions. For applications subject to data security regulations like GDPR and HIPAA, these controls can help teams meet their compliance requirements.

Example:

In a healthcare application that stores thousands of patients’ medical records, Kubernetes’ RBAC feature ensures that only authorized members have access to sensitive information. Network policies can also control traffic flow between different parts of the application, adding another layer of security.
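The RBAC part of that scenario can be sketched as a Role that allows reading Secrets in one namespace, plus a RoleBinding that grants it to a group. The namespace, role, and group names below are invented for illustration, and how groups map to real users depends on the cluster’s authentication setup.

```python
import json

NAMESPACE = "medical-records"  # hypothetical namespace
RBAC_API = "rbac.authorization.k8s.io"

role = {
    "apiVersion": f"{RBAC_API}/v1",
    "kind": "Role",
    "metadata": {"namespace": NAMESPACE, "name": "secret-reader"},
    "rules": [{
        "apiGroups": [""],            # "" means the core API group
        "resources": ["secrets"],
        "verbs": ["get", "list"],     # read-only access
    }],
}

role_binding = {
    "apiVersion": f"{RBAC_API}/v1",
    "kind": "RoleBinding",
    "metadata": {"namespace": NAMESPACE, "name": "clinical-staff-read-secrets"},
    "subjects": [{
        "kind": "Group",
        "name": "clinical-staff",     # hypothetical group name
        "apiGroup": RBAC_API,
    }],
    "roleRef": {"kind": "Role", "name": role["metadata"]["name"], "apiGroup": RBAC_API},
}

bundle = {"apiVersion": "v1", "kind": "List", "items": [role, role_binding]}
print(json.dumps(bundle, indent=2))
```

Anyone outside the bound group gets no access to those Secrets through the API, since RBAC denies by default.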

For AI applications, keeping costs low is super important, especially as they scale and require more resources. Kubernetes AI deployment supports cost-efficient deployment methods and management of AI workloads through auto-scaling, multi-tenancy, and resource allocation controls.

Also, Kubernetes’ ability to run across various cloud providers enables organizations to adopt a multi-cloud strategy, selecting cost-effective platforms for different parts of their AI infrastructure. The ‘pay-as-you-grow’ structure of Kubernetes orchestration allows organizations to provision resources incrementally based on demand.

Example:

In a scenario where a financial services company is deploying AI for risk analysis, it can use Kubernetes’ auto-scaling to manage AI workloads. In a high-demand situation, such as quarterly financial reporting, the system automatically scales up to handle increased data processing.

According to a survey report published by the CNCF, 98% of the respondents use AI applications built on Kubernetes AI deployment to run data-intensive workloads on the cloud.

With such benefits ensuring efficiency in resource management and faster workflows, it is no wonder Kubernetes AI deployment is gaining huge popularity among the tech giants.

As businesses aim to modernize applications and optimize IT infrastructure, Kubernetes stands out as a crucial container orchestration technology, enabling seamless deployment, scaling, and management of applications. Now, let’s take a look at a few big organizations that have adopted Kubernetes.

One of the early adopters of microservices, Netflix uses Kubernetes to simplify and streamline deployments. By orchestrating its vast array of microservices with Kubernetes, Netflix ensures resilience, scalability, and efficiency, allowing developers to deploy new features in less time and handle massive user loads effectively.

GitLab leverages Kubernetes to power its CI/CD pipeline automation process. With Kubernetes, GitLab can run and scale thousands of CI/CD tasks concurrently, allowing developers to test code, deploy updates, and quickly iterate on new features.

Global bank HSBC adopted Kubernetes to manage its applications across private and public clouds. HSBC achieved flexibility in workload placement by leveraging Kubernetes, enabling it to optimize resource costs, improve data locality, and meet strict regulatory requirements.

Airbnb leverages Kubernetes for its ML models, allowing data scientists to run complex machine learning and AI analytics models in a distributed and scalable environment. Kubernetes orchestrates resources to ensure efficient processing of large datasets while enabling reproducibility and consistency in ML workflows.

YouTube leverages Kubernetes cluster management to orchestrate its global content delivery, enabling scalable video delivery based on demand. This automation of resource allocation and load balancing ensures consistent video quality for users across all devices and regions. It helps YouTube handle spikes in demand without disrupting service quality.

According to the 2023 CNCF Annual Survey, 84% of the cloud-native community is either using or planning to migrate to Kubernetes.

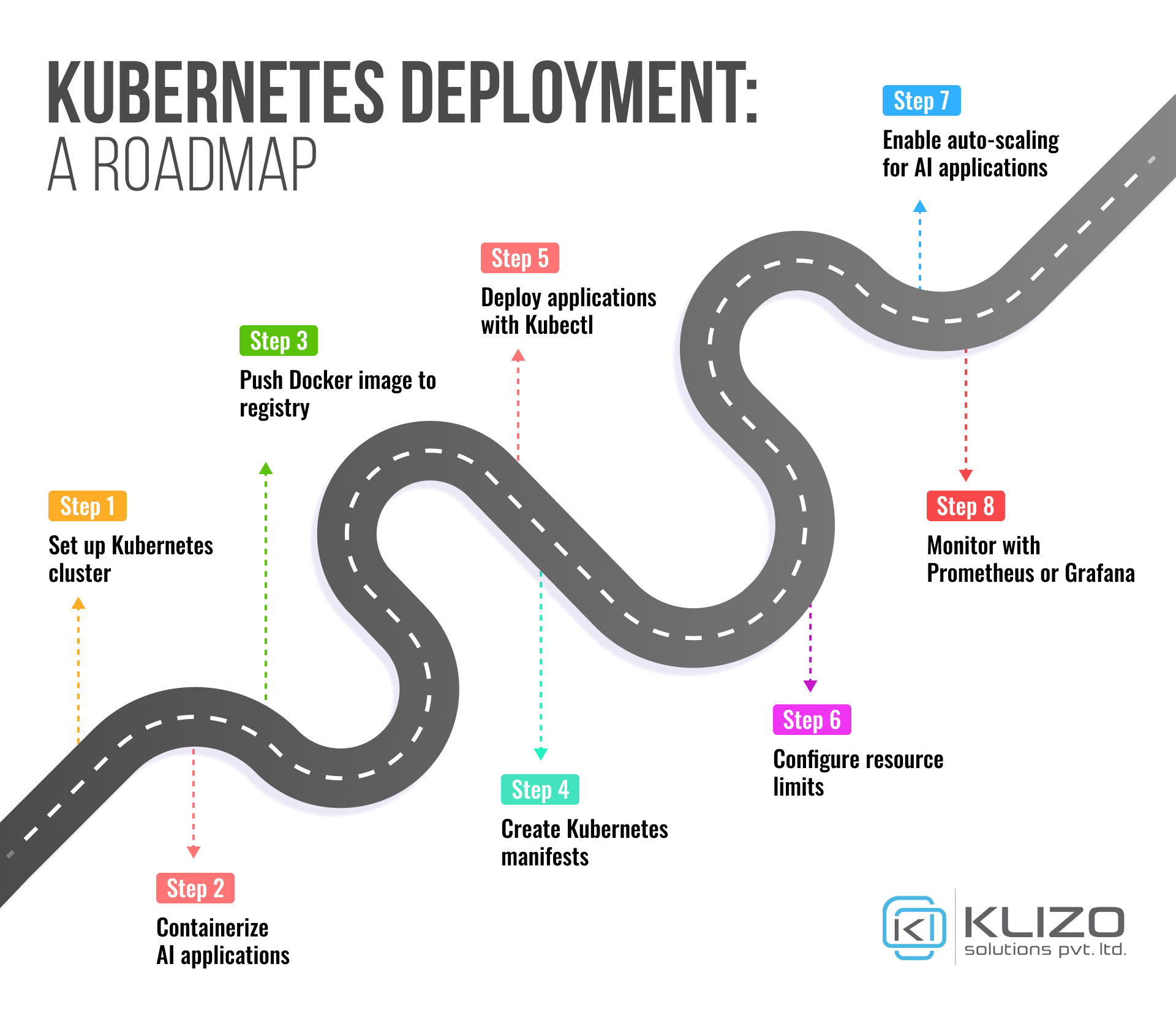

Now, despite having so many real-world applications, the implementation and deployment of Kubernetes might feel a bit confusing and overwhelming at first. Well, no need to worry as here we’ll explain how to manage and deploy AI apps on K8s like a pro!

You need to select a Kubernetes platform, such as a managed service from your cloud provider or a self-hosted distribution, and then follow the platform-specific instructions to set up your Kubernetes cluster.

Create a Dockerfile to package your AI application into a container image. Build the image and push it to a container registry like Docker Hub or your cloud provider’s registry.

Write YAML files to define the desired state of your application, including Deployments, Services, and ConfigMaps. Define persistent volumes and claims for data storage.
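As a sketch of the supporting objects that usually sit next to a Deployment, the snippet below builds a ConfigMap, a PersistentVolumeClaim, and a Service as Python dictionaries and emits them as JSON, which kubectl reads the same way it reads YAML. Every name, the storage size, and the port numbers are assumptions for illustration, and the Service only routes traffic if your Deployment’s pods carry the matching app label.

```python
import json

APP = "model-api"  # hypothetical application name

config_map = {
    "apiVersion": "v1",
    "kind": "ConfigMap",
    "metadata": {"name": f"{APP}-config"},
    # ConfigMap values must be strings, even for numbers.
    "data": {"MODEL_NAME": "sentiment-v1", "BATCH_SIZE": "32"},
}

volume_claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": f"{APP}-data"},
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "20Gi"}},
    },
}

service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": APP},
    "spec": {
        "selector": {"app": APP},                      # must match the pod labels
        "ports": [{"port": 80, "targetPort": 8080}],   # cluster port -> container port
    },
}


def to_manifest_list(*objects):
    """Wrap several objects in a single List so one file can hold them all."""
    return {"apiVersion": "v1", "kind": "List", "items": list(objects)}


manifest = to_manifest_list(config_map, volume_claim, service)
print(json.dumps(manifest, indent=2))
```

Generating manifests from code like this is one option among several; hand-written YAML or a templating tool describes the same objects.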

Apply the manifests with kubectl apply, and then monitor the rollout of your AI application.

Configure the Horizontal Pod Autoscaler (HPA) to scale pods based on CPU usage or custom metrics. Manage your resource allocation using Kubernetes resource quotas, requests, and limits.
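A minimal sketch of such an autoscaler, built as a Python dictionary and printed as JSON for kubectl: it targets a Deployment and scales on average CPU utilization. The Deployment name, the replica bounds, and the 70% target are illustrative assumptions. CPU utilization is measured against the containers’ CPU requests, so the target pods need those set, and the cluster needs a metrics source such as metrics-server.

```python
import json


def cpu_autoscaler(deployment_name, min_replicas, max_replicas, target_cpu_percent):
    """Build an autoscaling/v2 HPA that targets a Deployment (hypothetical helper)."""
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("need 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment_name},
        "spec": {
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment_name,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    # Average utilization across pods, as a percentage of requests.
                    "target": {"type": "Utilization",
                               "averageUtilization": target_cpu_percent},
                },
            }],
        },
    }


hpa = cpu_autoscaler("model-api", min_replicas=2, max_replicas=10, target_cpu_percent=70)
print(json.dumps(hpa, indent=2))
```

Custom metrics follow the same structure with a different metric type, but they require an additional metrics adapter on the cluster.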

Automate the deployment process by configuring CI/CD pipelines. Then use monitoring tools, such as Prometheus for metrics, to keep an eye on your application’s performance and logs.

FAQ

Kubernetes can automate the deployment, scaling, and management of machine learning models, ensuring high availability, efficient resource utilization, and smooth integration with different tools and frameworks.

Kubernetes ensures seamless ML model deployment, automated scaling, and efficient resource usage. It enhances performance and scalability while simplifying AI model management.

Optimize AI on Kubernetes by leveraging GPU scheduling, configuring resource limits, using auto-scaling for resource allocation, employing persistent storage for data management, and integrating CI/CD pipelines for streamlined deployments. Use monitoring tools like Prometheus to ensure performance.

The future of Kubernetes AI deployment is full of exciting advancements. With its expanding ecosystem, enhanced security features, and seamless integration with AI/ML workflows, Kubernetes is rocking the cloud-native world.

Plus, ML models running on Kubernetes benefit from self-healing, load balancing, and seamless updates, ensuring availability and efficient resource use. In short, it’s the one-stop solution for all your AI deployment needs.

And to make the most of this “one-stop solution”, you need professionals specializing in deploying AI-driven applications using Kubernetes. At Klizo Solutions, we have an expert team who can help you harness the full potential of Kubernetes, ensuring your AI applications are robust, scalable, and seamlessly integrated.


Joey Ricard

Klizo Solutions was founded by Joseph Ricard, a serial entrepreneur from America who has spent over ten years working in India, developing innovative tech solutions, building good teams, and admirable processes. And today, he has a team of over 50 super-talented people with him and various high-level technologies developed in multiple frameworks to his credit.
