Edge AI pushes machine learning inference to the network perimeter—on cameras, gateways, and base stations—so IIoT and smart city systems act on events in milliseconds without round-tripping to the cloud. Using MEC for placement, 5G/URLLC for transport, and an MLOps toolchain tailored to embedded devices, organizations gain low latency, higher reliability, and better data control. Start with a thin pilot (one use case, one site), select models that compress well, implement zero-trust at ingress, and use OPC UA for industrial interoperability. Measure success via end-to-end latency, uptime, safety incidents avoided, OEE gain, and maintenance cost reduction.

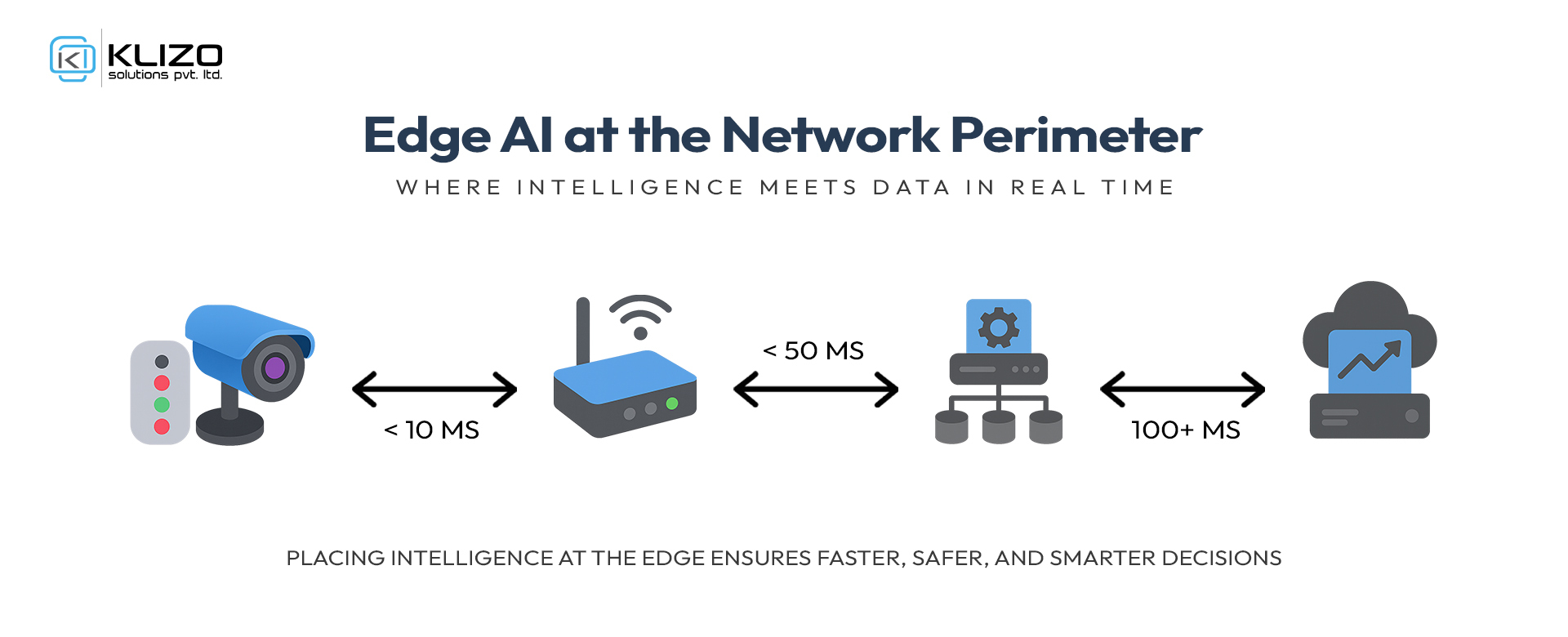

Edge AI means running trained models close to where data is generated—on devices, gateways, rugged PCs, or MEC nodes. Doing inference at the network perimeter reduces round-trip latency, avoids bandwidth spikes, and keeps sensitive data local by design. On a production line, sub-100 ms reactions can prevent equipment damage; in a city, sub-50 ms insights improve traffic flow, pedestrian safety, and emergency response.

MEC, standardized by ETSI, places compute and storage in or near the radio access network, enabling low-latency services and local breakout for AI workloads. It unifies telco and IT cloud patterns so applications can run “inside the RAN” while still looking like cloud-native microservices.

In a public-network scenario, a common pattern is:

Device layer: cameras, sensors, PLCs

Access layer: 5G gNodeB with URLLC option for deterministic performance

MEC platform: containerized AI microservices (video analytics, anomaly detection), local message bus, feature store cache

Mid-haul/backhaul: selective event uplink to cloud for archiving and retraining

ETSI’s MEC reference architecture defines system, host, and platform components plus APIs for app lifecycle management and traffic rules, making this multi-vendor by default.

In plants, OPC UA, the de facto interoperability standard for industrial data exchange, provides secure, vendor-neutral communication between controllers and edge runtimes. Modern gateways run container stacks that bridge OPC UA data into AI inference services and signal safety interlocks back to SCADA/PLC when anomalies are detected.

Placement tip: Run preprocessing (e.g., background subtraction) on-device, inference at gateway, and multi-stream correlation at MEC. This layered split balances power, thermals, and latency.

Three levers drive performance:

Compute locality: Every cloud hop adds 20–100+ ms. MEC collapses the path to one or two L2/L3 hops.

Radio profile: 5G URLLC targets ultra-low latency and extreme reliability for industrial control, e.g., 50 ms end-to-end latency targets for certain scenarios, with “six nines” reliability.

Model footprint: Smaller models (quantized INT8/INT4), fused operators, and TensorRT optimizations can cut inference time substantially on edge SoCs.

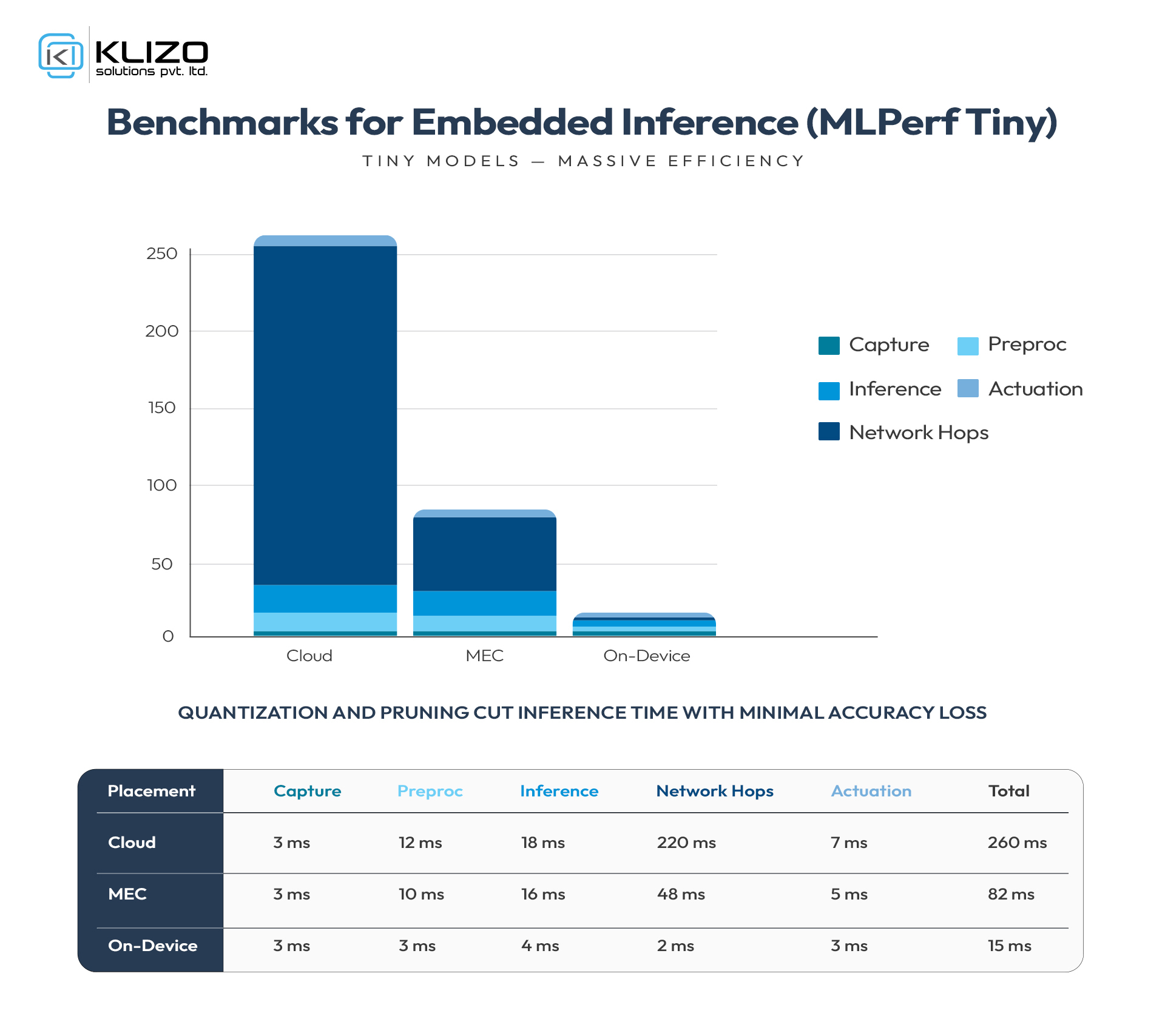

Rule of thumb: For closed-loop control, design for p95 latency (not average). Budget each stage (capture, encode, feature extraction, inference, actuation). With MEC and tuned radio, sub-50 ms end-to-end is realistic for many vision tasks; microcontroller-class anomaly detection can act in single-digit milliseconds when models are tiny (as benchmarked by the MLPerf Tiny working group).
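The per-stage budgeting above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the stage budgets in `STAGE_BUDGET_MS` are hypothetical values chosen to sum to 45 ms, and the nearest-rank percentile is one of several common p95 definitions.

```python
# Hypothetical per-stage budgets in milliseconds (illustrative values only).
STAGE_BUDGET_MS = {
    "capture": 8.0,
    "encode": 6.0,
    "feature_extraction": 6.0,
    "inference": 15.0,
    "actuation": 10.0,
}


def p95(samples):
    """Nearest-rank 95th percentile of a non-empty list of latencies."""
    ordered = sorted(samples)
    # Integer form of ceil(0.95 * n); avoids floating-point rounding surprises.
    rank = (95 * len(ordered) + 99) // 100
    return ordered[rank - 1]


def check_budget(stage_samples, budgets=STAGE_BUDGET_MS):
    """Return {stage: (p95_ms, within_budget)} for each measured stage."""
    report = {}
    for stage, samples in stage_samples.items():
        observed = p95(samples)
        report[stage] = (observed, observed <= budgets[stage])
    return report
```

Summing per-stage p95 values gives a conservative estimate of the end-to-end tail, so it is still worth measuring end-to-end p95 directly once the loop is wired up.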

Keeping data at the perimeter reduces exposure, but security must be explicit:

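Device baseline: Implement the NIST IR 8259A core capabilities (unique device identification, secure configuration, data protection, access control on interfaces, authenticated software updates, and cybersecurity state awareness such as logging), and pair them with secure boot.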

Zero-trust network access: Mutual TLS, short-lived certs, and policy checks on every call (even inside MEC).

Data minimization: Store events, not raw streams; purge local buffers on success.

SBOM + signed containers: Required for supply-chain integrity on gateways and MEC hosts.

Physical tamper: Conformal coatings, secure elements/TPMs, and watchdogs on unattended outdoor nodes.
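As a rough illustration of the mutual TLS item above, the sketch below builds a server-side context with Python's standard `ssl` module that refuses clients without a valid certificate. The function name and the file-path parameters are hypothetical; certificate issuance, short-lived rotation, and per-call policy checks are outside its scope.

```python
import ssl


def build_mtls_server_context(cert_file=None, key_file=None, ca_file=None):
    """Server-side TLS context that requires a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # The "mutual" part: the handshake fails unless the client presents
    # a certificate that chains to a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        # Paths are placeholders supplied by the caller (assumption).
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if ca_file:
        ctx.load_verify_locations(cafile=ca_file)
    return ctx
```

In a real deployment the context would be passed to the service's listener, and the CA bundle would contain only the internal issuing CA rather than public roots.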

Treat edge deployments like any other production ML—just with more constraints.

Pipeline essentials

Model registry with hardware tags: Associate artifacts to edge targets (Jetson, x86, ARM, MCU).

Cross-compilation & optimization stage: Export to ONNX, then build platform-specific engines (TensorRT, OpenVINO, ONNX Runtime EP).

Fleet-safe rollouts: A/B and canary by device cohort; automatic rollback on latency/accuracy regressions.

Drift monitoring: Lightweight telemetry—confidence scores, class histograms, and edge-calculated KPIs—to trigger retraining.

Offline retraining loop: Periodically export embeddings or compressed feature sets to cloud; never ship raw PII.
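One lightweight way to turn the class histograms mentioned above into a drift signal is the population stability index (PSI). The sketch below is an assumption about how such a check might look, not a prescribed method; the 0.2 threshold is a commonly quoted rule of thumb and should be tuned per use case.

```python
import math

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb value; tune per deployment


def population_stability_index(baseline_counts, live_counts, eps=1e-6):
    """PSI between two class-count histograms keyed by class label."""
    labels = set(baseline_counts) | set(live_counts)
    base_total = sum(baseline_counts.values())
    live_total = sum(live_counts.values())
    psi = 0.0
    for label in labels:
        # eps keeps the logarithm finite when a class is missing on one side.
        p = max(baseline_counts.get(label, 0) / base_total, eps)
        q = max(live_counts.get(label, 0) / live_total, eps)
        psi += (q - p) * math.log(q / p)
    return psi


def needs_retraining(baseline_counts, live_counts, threshold=DRIFT_THRESHOLD):
    """True when the live class mix has moved past the drift threshold."""
    return population_stability_index(baseline_counts, live_counts) > threshold
```

Only the histograms leave the device, which keeps the telemetry small and avoids shipping raw frames.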

Tooling note: NVIDIA Jetson modules and SDKs are widely used for perimeter vision and robotics, offering energy-efficient AI compute plus an optimized software stack for real-time inference.

For Edge AI, performance = accuracy × efficiency × thermal headroom.

Quantization: INT8 (or FP8/FP4 on newer accelerators) shrinks memory and boosts throughput; calibrate with representative data.

Structured pruning: Remove entire channels/filters to enable kernel-level speedups.

Knowledge distillation: Small student learns from a larger teacher; pair with quantization for best results.

Operator fusion: Fold BN + activation; leverage vendor runtimes for kernel fusion.

TinyML class: For MCUs, use MLPerf Tiny benchmarks to sanity-check feasibility and compare runtimes across libraries.
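To make the quantization item concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization in plain Python. It uses max-abs scaling, which is the simplest form of calibration; production toolchains typically use more robust calibration over representative data, and the function names here are illustrative only.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization. Returns (int_values, scale)."""
    max_abs = max(abs(v) for v in values)
    # Map the largest magnitude to 127; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from INT8 codes."""
    return [q * scale for q in quantized]
```

The round-trip error per value is bounded by roughly half the scale, which is why outliers in the calibration data matter: one large value stretches the scale and costs precision everywhere else.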

5G URLLC: For motion control and safety, URLLC’s reliability (up to 99.9999% targets) and low-latency profiles enable deterministic loops. MEC co-location further reduces tail latency.

Wi-Fi 6/7: High throughput in factory cells; add private SSIDs, TSN profiles, and spectrum planning.

LPWAN (LoRaWAN/NB-IoT): Ideal for low-power sensors; run simple Edge AI locally (e.g., anomaly flags) then uplink short payloads.
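For the LPWAN case, "short payloads" usually means a handful of bytes. The sketch below packs an anomaly flag and score into 6 bytes with Python's `struct` module; the field layout is hypothetical and would need to match whatever decoder runs on the network server.

```python
import struct

# Hypothetical 6-byte big-endian layout (assumption, not a standard):
# device id (uint16), flags (uint8), anomaly score 0..255 (uint8),
# battery level in millivolts (uint16).
PAYLOAD_FORMAT = ">HBBH"
FLAG_ANOMALY = 0x01


def encode_uplink(device_id, anomaly, score, battery_mv):
    """Pack one reading; score is expected in the range [0, 1]."""
    flags = FLAG_ANOMALY if anomaly else 0
    score_byte = max(0, min(255, round(score * 255)))
    return struct.pack(PAYLOAD_FORMAT, device_id, flags, score_byte, battery_mv)


def decode_uplink(payload):
    """Unpack a payload produced by encode_uplink."""
    device_id, flags, score_byte, battery_mv = struct.unpack(PAYLOAD_FORMAT, payload)
    return {
        "device_id": device_id,
        "anomaly": bool(flags & FLAG_ANOMALY),
        "score": score_byte / 255,
        "battery_mv": battery_mv,
    }
```

Quantizing the score to one byte loses a little resolution but keeps the uplink well within typical LPWAN payload limits.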

Pittsburgh’s SURTRAC adaptive traffic control, an early exemplar of decentralized, edge-driven optimization, reported ~25% reduction in travel time and ~40% reduction in wait time at pilot intersections. While the original system predates modern deep learning, it validates the principle: local, real-time decisions outperform centralized polling on fast-changing queues.

Many European cities (e.g., Barcelona) now combine camera analytics with adaptive control; published reviews highlight AI-enabled analysis of real-time traffic feeds to optimize flow and safety.

Edge AI pattern: camera → on-device motion vectors → gateway inference (vehicle/pedestrian states) → MEC correlation → signal timing actuation.

Outcome targets for a modern rollout:

15–30% shorter average delays at peak

10–20% lower emissions on corridors

Measurable reduction in red-light violations

An automotive supplier instrumented stamping presses with vibration and acoustic sensors. A quantized 1D-CNN ran on a rugged gateway (Edge AI) with p95 inference < 8 ms; features and event counts synced to MEC. After three months:

MTBF improved by 18%, unscheduled downtime dropped by 22%

Spare parts inventory optimized (fewer emergency shipments)

Operators received on-device alerts integrated via OPC UA to SCADA

(Note: Composite example based on common outcomes across vendor case libraries; performance feasibility aligns with MLPerf Tiny-class benchmarks and Jetson-class runtimes.)

Cost buckets: devices, gateways/SoCs, MEC nodes, connectivity (public/private 5G), platform licenses, integration, and operations (patching, model refresh).

Value buckets: avoided downtime, process yield uplift, reduced backhaul cost, safety/insurance improvements, and regulatory compliance.

Quick calculator (first year):

CapEx: (devices × unit) + (gateways × unit) + MEC cluster + install

OpEx: connectivity + platform + support + retraining/validation

Return: (downtime_saved × cost/hr) + (throughput_gain × margin) + (bandwidth_saved × egress_rate)

Aim for <18-month payback on a single high-value use case before scaling horizontally.
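The quick calculator above translates directly into code. The sketch below is a simplified model that assumes returns and OpEx accrue evenly across the year; every input is a placeholder to be replaced with your own figures.

```python
def first_year_return(downtime_hours_saved, cost_per_hour,
                      throughput_gain_units, margin_per_unit,
                      bandwidth_saved_gb, egress_rate_per_gb):
    """Return = downtime saved + throughput gain + bandwidth saved."""
    return (downtime_hours_saved * cost_per_hour
            + throughput_gain_units * margin_per_unit
            + bandwidth_saved_gb * egress_rate_per_gb)


def payback_months(capex, annual_opex, annual_return):
    """Months until net benefit covers CapEx, or None if it never does."""
    net_monthly = (annual_return - annual_opex) / 12.0
    if net_monthly <= 0:
        return None
    return capex / net_monthly


# Hypothetical inputs for illustration only.
example_return = first_year_return(120, 5000, 10000, 4, 50000, 0.08)
example_payback = payback_months(400000, 164000, example_return)
```

With these made-up numbers the payback lands at about ten months, inside the 18-month target; a `None` result means the use case does not pay for itself at current OpEx.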

ETSI MEC: APIs and architecture for hosting applications at the edge of mobile networks.

3GPP URLLC: Profiles and requirements for ultra-reliable, low-latency communications in 5G.

OPC UA: Secure, interoperable industrial data exchange across vendors and layers.

NIST IR 8259A: IoT device security capabilities baseline used by many procurement teams.

MLPerf Tiny: Community benchmark for MCU/low-power inference performance.

Cloud-first bias: Shipping raw streams to the cloud “for now” creates permanent cost and latency debt.

Over-sized models: Big accuracy wins evaporate if heat throttling pushes p95 latency beyond control loop limits.

Update blind spots: Unmanaged field devices grow stale; adopt signed OTA with staged rollouts.

Security afterthought: No SBOM, default passwords, and flat networks are non-starters—especially in civic deployments.

Single-vendor lock-in: Favor open standards (OPC UA, ONNX) and portable build pipelines.

0–3 months (pilot):

Pick one use case (e.g., defect detection or a single intersection).

Baseline current KPIs (latency, accuracy, incidents, OEE).

Build an MVP pipeline: labeled data → small model → quantization → deploy to Edge AI gateway; instrument telemetry.

Stand up a two-node MEC (or operator MEC slice) and secure the path (mTLS, short-lived certs).

4–9 months (scale-up):

Add canary rollouts, drift alarms, and blue/green model slots.

Integrate OPC UA/SCADA or traffic controllers.

Introduce A/B testing on thresholds and pre/post-processing.

Contractual SLOs: p95 latency, availability, patch windows.
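A canary gate for the scale-up phase can be as simple as comparing a canary cohort against the baseline on the same SLO metrics. The sketch below assumes two metrics (p95 latency and accuracy) and hypothetical tolerances of 10% latency regression and one point of accuracy; the names and thresholds are illustrative.

```python
from dataclasses import dataclass


@dataclass
class CohortStats:
    p95_latency_ms: float
    accuracy: float


def canary_decision(baseline, canary,
                    max_latency_regression=0.10, max_accuracy_drop=0.01):
    """Return 'promote' or 'rollback' for a canary device cohort."""
    latency_limit = baseline.p95_latency_ms * (1.0 + max_latency_regression)
    accuracy_floor = baseline.accuracy - max_accuracy_drop
    if canary.p95_latency_ms > latency_limit or canary.accuracy < accuracy_floor:
        return "rollback"
    return "promote"
```

Wiring this decision into the OTA system is what makes rollback automatic; the gate itself should stay simple enough to audit.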

10–18 months (program):

Expand to 3–5 use cases; share a common Edge AI platform (registry, OTA, observability).

Automate retraining; feed MEC-aggregated features back to cloud storage.

Vendor risk management; second source critical hardware.

Edge AI at the network perimeter delivers millisecond decisions without backhauling data.

MEC + 5G (URLLC) enable deterministic performance for city and industrial loops.

Use OPC UA for plant interoperability and NIST IR 8259A for device security baselines.

Build an MLOps-for-edge pipeline with quantization, canary updates, and drift monitoring.

Start narrow, measure rigorously, and expand once payback is proven.

Q1. How is Edge AI different from edge analytics?

Edge analytics summarizes data at the edge; Edge AI performs inference (classification, detection, prediction) at the edge, enabling automated actions within tight latency budgets.

Q2. What’s a good latency target for closed-loop control?

Design to p95 < 50 ms for traffic/pedestrian scenarios; p95 < 10–20 ms for specific machine safety loops, depending on process dynamics and actuator constraints. 5G URLLC and MEC help achieve these targets.

Q3. Which optimizations matter most for edge devices?

Quantization (INT8/FP8/FP4) and operator fusion usually yield the biggest wins; combine with structured pruning and distillation for compact, fast models.

Q4. How do I secure city cameras and gateways?

Adopt zero-trust (mTLS, cert rotation), enforce the NIST IR 8259A device baseline, and avoid raw video retention; export events/embeddings instead.

Q5. How can I compare MCU-class inference options?

Use MLPerf Tiny results to benchmark frameworks and hardware under consistent workloads.

Edge AI moves intelligence to the network perimeter so IIoT and smart cities can act in real time, protect privacy, and curb bandwidth waste. With MEC placement, URLLC-grade transport, OPC UA interoperability, and an MLOps pipeline designed for constrained hardware, organizations can deliver measurable improvements—faster flows, safer streets, and more resilient plants. Start with one high-impact loop, quantify gains, then scale with a common Edge AI stack across sites.

Ready to pilot? We can help evaluate your workload, select the right gateway/MEC mix, and design a secure rollout plan for your environment.

Joey Ricard

Klizo Solutions was founded by Joseph Ricard, a serial entrepreneur from America who has spent over ten years working in India, developing innovative tech solutions and building strong teams and processes. Today he leads a team of over 50 people and has a range of high-level technologies, developed in multiple frameworks, to his credit.
