Move over chess and code completion. AI’s latest battleground is formal mathematics. And in this high-stakes intellectual arena, two heavyweights have emerged: DeepSeek-Prover-V2 and GPT-4.

While most large language models dazzle with essays, Python scripts, and clever banter, formal mathematics demands something else entirely—rigor, symbolic precision, and step-by-step logic with no margin for error. This isn’t autocomplete. It’s cognitive combat.

Formal mathematics has become a proving ground for the next generation of AI reasoning. In this article, we examine the head-to-head between DeepSeek-Prover-V2 and GPT-4 through the lens of benchmarks like MiniF2F. We also explore what sets formal math apart, how architectural choices affect performance, and what all of this means for researchers, educators, and AI developers.

If you’re building with LLMs, testing their reasoning limits, or just fascinated by symbolic logic—this one’s for you.

When people hear “AI doing math,” they might picture a souped-up calculator. But formal mathematics is far more complex, and infinitely more interesting.

Formal math involves crafting structured proofs inside proof assistants such as Lean, Coq, or Isabelle. Each proof must follow exacting syntactic and semantic rules, so there’s no room for vague answers or creative interpretation. You’re not just solving problems; you’re proving results, and the proof assistant mechanically checks every single step before it accepts the proof.
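To make “exacting syntax and semantic rules” concrete, here is a minimal Lean 4 sketch that needs only Lean’s core library. The type `MyNat` and the theorem names are illustrative, not taken from any benchmark or library. Notice that `n + 0 = n` is accepted by computation alone, while `0 + n = n` needs an explicit induction; Lean rejects the file if any step fails to check.

```lean
-- Natural numbers built from scratch (names are illustrative).
inductive MyNat where
  | zero : MyNat
  | succ : MyNat → MyNat

namespace MyNat

-- Addition, defined by recursion on the second argument.
def add : MyNat → MyNat → MyNat
  | n, .zero   => n
  | n, .succ m => .succ (add n m)

-- `n + 0 = n` holds by definition, so `rfl` closes it.
theorem add_zero (n : MyNat) : add n .zero = n := rfl

-- `0 + n = n` does not hold by definition; it needs induction on `n`.
theorem zero_add (n : MyNat) : add .zero n = n := by
  induction n with
  | zero => rfl
  | succ m ih =>
    -- `add .zero (.succ m)` unfolds to `.succ (add .zero m)`; rewrite with the hypothesis.
    show MyNat.succ (add .zero m) = MyNat.succ m
    rw [ih]

end MyNat
```

Every step is either a definitional unfolding or an explicit appeal to the induction hypothesis `ih`; there is no way to skip one and still get the file accepted.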

This matters for a few reasons. Formal methods reach well beyond pure mathematics, particularly into computer science, cryptography, and algorithm design, where machine-checked proofs are used to verify that software and protocols behave as specified. Formal math also serves as a testbed for AI’s ability to move from fuzzy statistical prediction to symbolic, verifiable reasoning.

GPT-4 may be able to rhyme couplets or refactor JavaScript, but can it derive a theorem from axioms without skipping steps or making false inferences? That’s the gold standard of reasoning, and it’s why formal math is increasingly viewed as one of the most demanding benchmarks for machine reasoning.

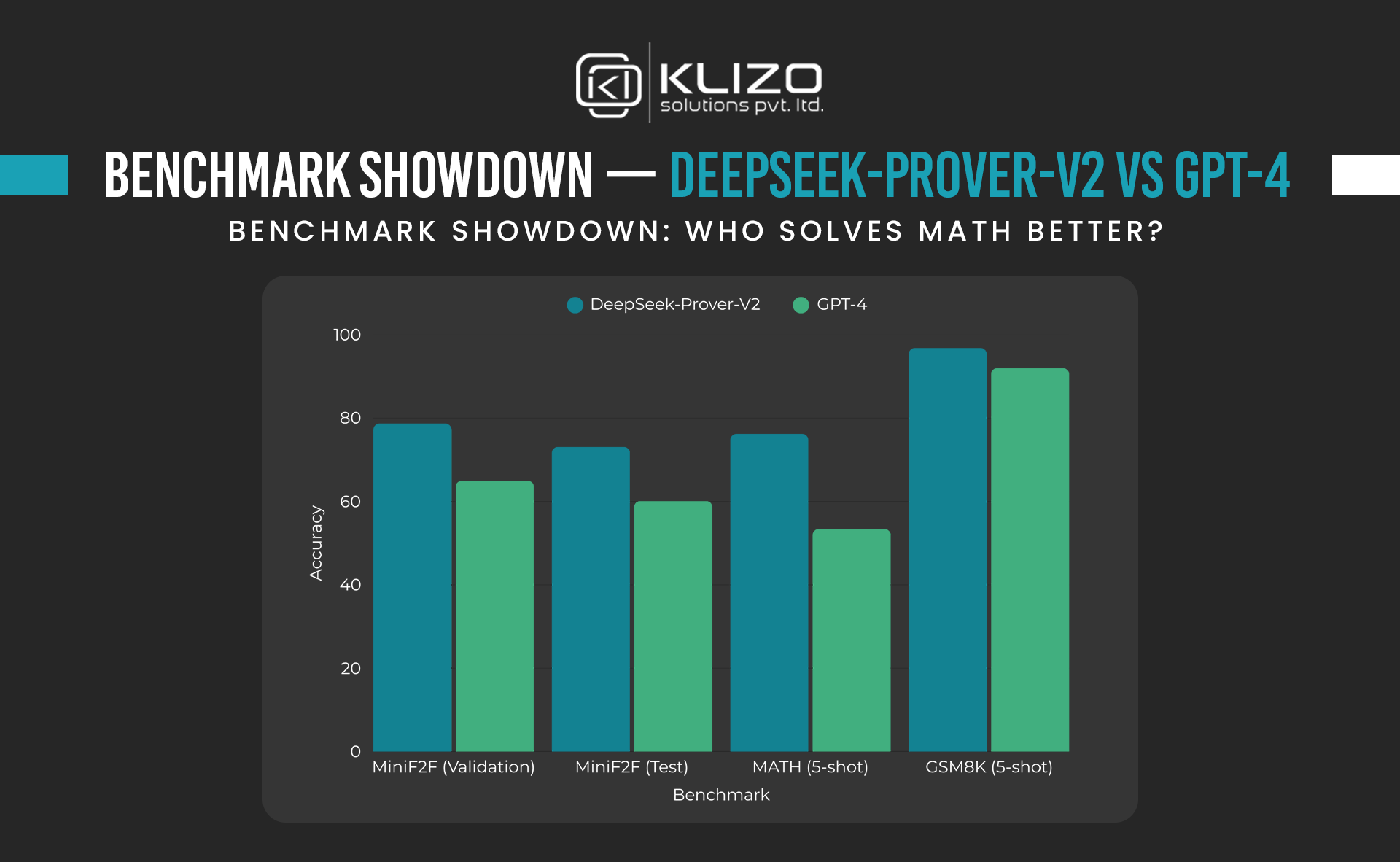

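To understand how AI models stack up in formal reasoning, researchers use benchmarks designed to isolate different aspects of mathematical competence:

- GSM8K: A collection of roughly 8,500 grade-school math word problems that test chain-of-thought reasoning and arithmetic precision.
- MATH: 12,500 competition problems, drawn from contests such as the AMC and AIME, with step-by-step solutions that test more advanced informal problem solving.
- MiniF2F: 488 Olympiad-level problems from the AMC, AIME, IMO, and the MATH dataset, stated formally in proof assistants such as Lean and split into 244 validation and 244 test problems. A model succeeds only if the proof checker accepts its proof.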

The gap is significant. DeepSeek-Prover-V2 dominates in formal math, particularly on MiniF2F—the toughest of the bunch. These problems require multi-step reasoning using symbolic logic, not just number-crunching or pattern recognition.
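Scores on MiniF2F are usually reported as pass@k: the share of problems for which at least one of k sampled proof attempts is accepted by the Lean checker. The sketch below uses the commonly used unbiased estimator, computed from n attempts of which c were accepted. The function names and the (n, c) inputs are hypothetical and illustrate only how such a number is computed; they are not real benchmark results.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of proof attempts sampled
    c: number of those attempts the proof checker accepted
    k: attempt budget being scored
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every possible set of k attempts contains at least one accepted proof.
        return 1.0
    # 1 minus the probability that k attempts drawn without replacement all fail.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average per-problem pass@k; `results` holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

Because the estimate depends on k, a model’s MiniF2F score is only comparable to another’s when both use the same attempt budget.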

What makes MiniF2F especially demanding is that it is formal: DeepSeek-Prover-V2 is evaluated on its Lean 4 version, so models must generate proofs that are both logically correct and syntactically valid in a formal system. That’s an area where DeepSeek-Prover-V2 thrives, thanks to its architecture and domain-specific training.
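For a sense of what a MiniF2F-style task looks like, here is a small Lean 4 sketch using Mathlib. Both theorem names are made up and neither statement is an actual benchmark item. The model receives the statement and must supply everything after `:= by`; here a single Mathlib tactic suffices, whereas real competition problems typically need much longer tactic sequences.

```lean
import Mathlib

-- Hypothetical MiniF2F-style problem (not an actual benchmark item):
-- over the reals, if 3x + 2 = 11, then x = 3.
theorem hypothetical_linear (x : ℝ) (h₀ : 3 * x + 2 = 11) : x = 3 := by
  -- `linarith` closes goals that follow linearly from the hypotheses,
  -- including equality goals like this one.
  linarith

-- Purely numeric facts are often closed by `norm_num`.
theorem hypothetical_power : (2 : ℝ) ^ 10 = 1024 := by
  norm_num
```

If the model emits a tactic that does not close the goal, or a line that does not parse, Lean reports an error and the attempt counts as a failure; partial credit does not exist.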

GPT-4, for all its power in general reasoning and language tasks, falters in formal domains. It still performs admirably on GSM8K and MATH, but its performance drops sharply in tasks requiring strict logical formality.

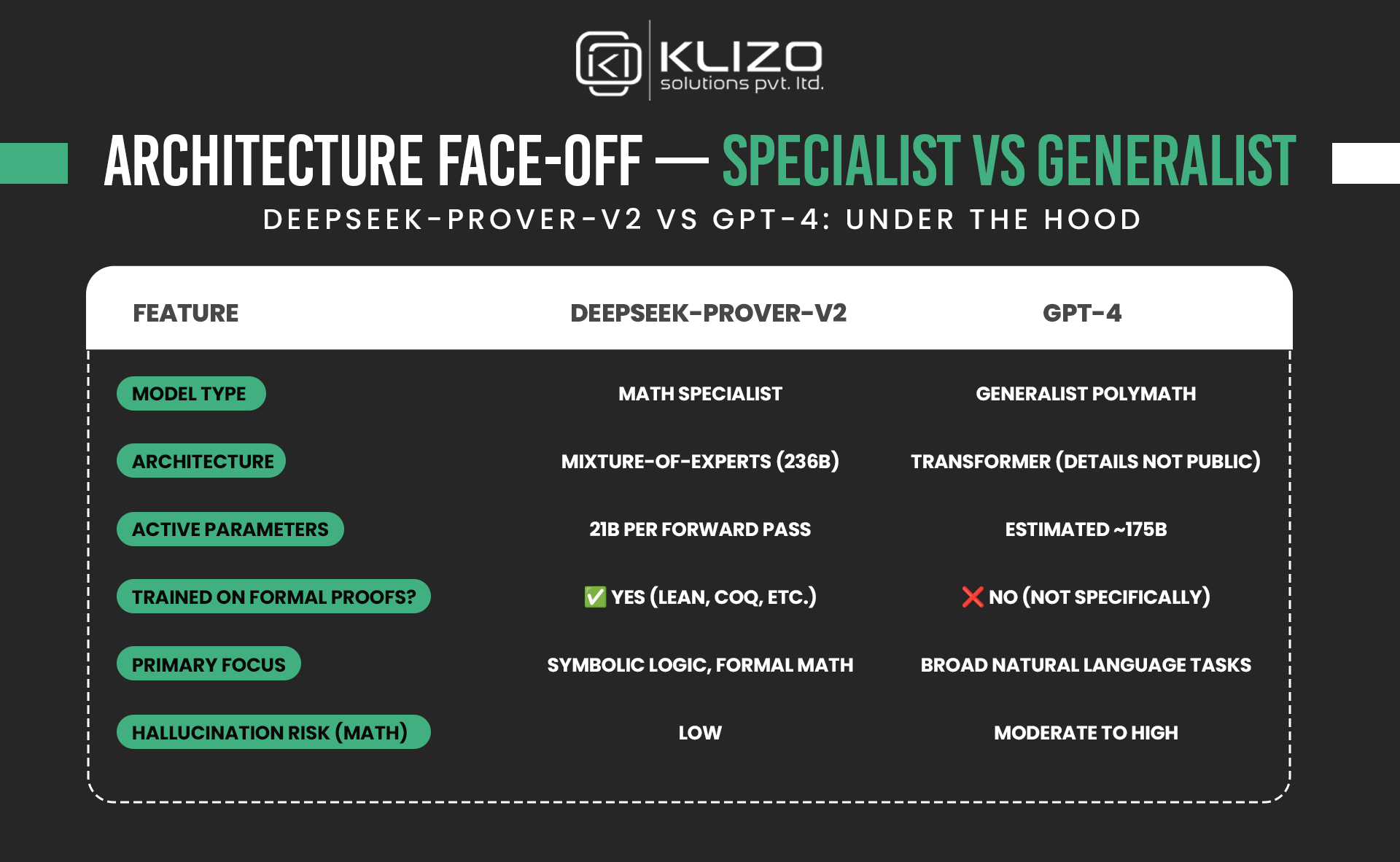

GPT-4 is a generalist—a polymath designed to tackle everything from translation and summarization to coding and conversation. It is built for versatility, not specialization. Formal math is just one of many tasks it can perform.

DeepSeek-Prover-V2 is the opposite. It is a specialist, trained specifically to write formal proofs in Lean 4. Its flagship 671B version is built on DeepSeek-V3-Base, a Mixture-of-Experts (MoE) model with 671 billion parameters in total but only about 37 billion activated per token, so each token is processed by a small subset of expert layers rather than the whole network. A smaller 7B version is also available.
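The gap between total and active parameters comes from top-k routing: a small gating network scores every expert for each token, but only the k highest-scoring experts actually run. Below is a toy, standard-library-only Python sketch of that idea. The function names, expert counts, and sizes are made up for illustration and do not reflect DeepSeek’s actual router.

```python
import math
from typing import Callable


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax over one token's gate scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(gate_scores: list[float], top_k: int) -> list[tuple[int, float]]:
    """Select the top_k experts for one token and renormalize their weights to sum to 1."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    kept = sum(probs[i] for i in chosen)
    return [(i, probs[i] / kept) for i in chosen]


def moe_forward(
    x: float,
    gate_scores: list[float],
    experts: list[Callable[[float], float]],
    top_k: int,
) -> float:
    """Toy MoE layer on a scalar input: only the routed experts are ever called."""
    return sum(weight * experts[i](x) for i, weight in route_token(gate_scores, top_k))


def active_fraction(num_experts: int, top_k: int, expert_params: int, dense_params: int) -> float:
    """Share of parameters used per token: always-on dense layers plus top_k experts."""
    total = dense_params + num_experts * expert_params
    active = dense_params + top_k * expert_params
    return active / total
```

Raising `num_experts` while keeping `top_k` fixed grows total capacity without growing per-token compute, which is the trade-off MoE models exploit.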

The key advantage? DeepSeek’s training set includes Lean proofs and formal math corpora, which sharpen its ability to produce step-by-step symbolic reasoning that aligns with the constraints of formal logic systems.

While GPT-4 can emulate reasoning and simulate steps in natural language, DeepSeek-Prover-V2 is optimized to do it by the book: its output is a formal proof that a checker either accepts or rejects.

This highlights a broader tension in the LLM space: should we build one model to rule them all, or multiple models finely tuned for specific domains? In formal math, specialization currently wins, and the DeepSeek-Prover vs GPT-4 rivalry makes that clear.

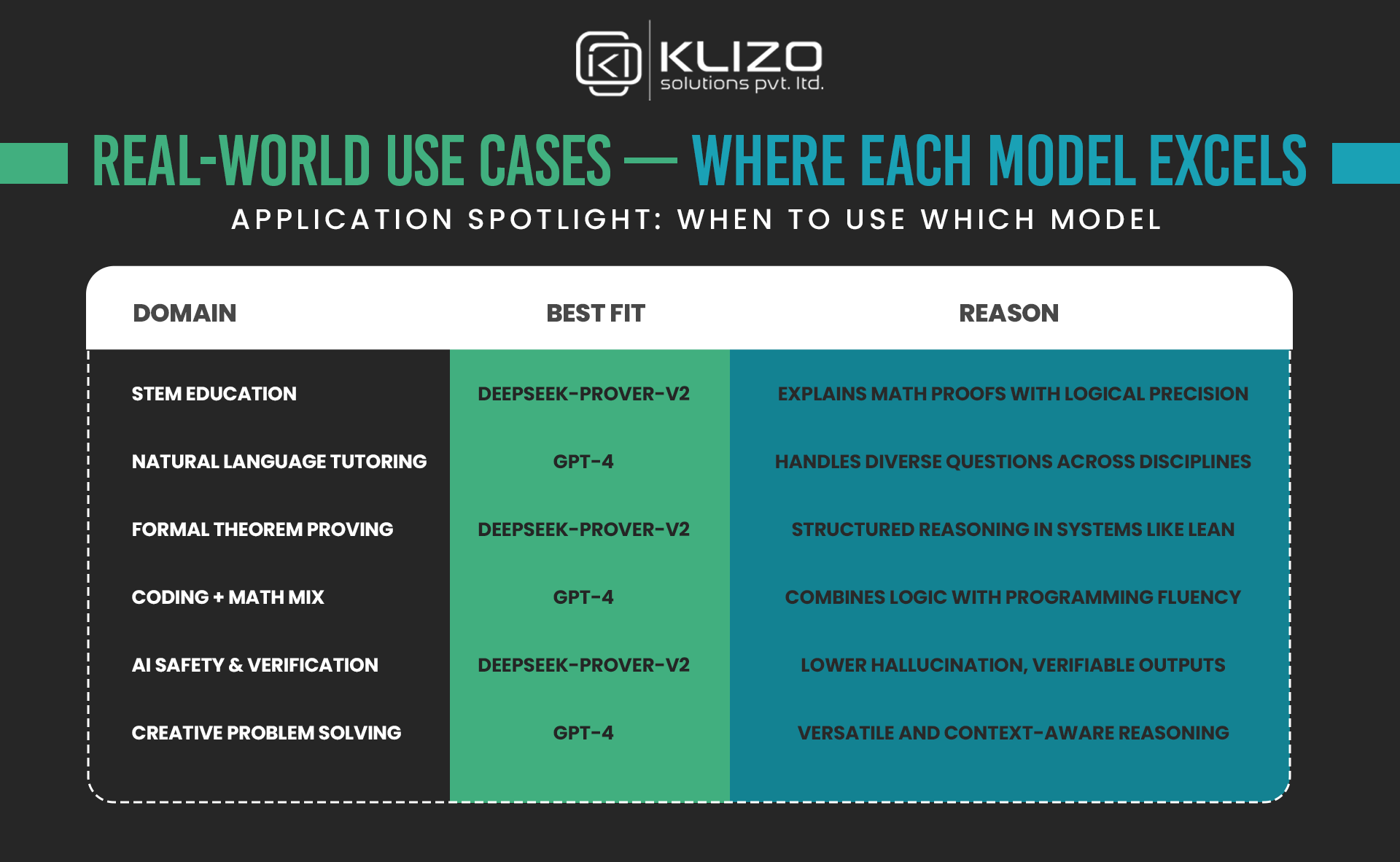

This is more than a scorecard comparison. It speaks to the future of AI’s role in science, education, and software verification.

That said, no model is flawless. DeepSeek-Prover-V2 may overfit to certain formal structures or struggle with problems outside Lean-style environments. GPT-4, while broadly capable, may hallucinate or lose logical precision on longer proofs.

This split reflects a growing architectural debate in AI: generalist vs specialist. The future may involve hybrid systems—GPT-like models handling context and user intent, and DeepSeek-like models executing formal logic underneath. That’s one more reason the DeepSeek-Prover vs GPT-4 competition deserves attention.
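One way to picture that division of labor is a pipeline in which a generalist model turns a question into a formal statement, a specialist prover proposes candidate proofs, and a proof checker has the final word. The Python sketch below is hypothetical: `solve_hybrid`, `HybridResult`, `formalize`, `propose_proofs`, and `check` are placeholder names, not any vendor’s API, and the callables are passed in so the orchestration logic can be shown without committing to a particular model or toolchain.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class HybridResult:
    statement: str        # formal statement produced by the generalist model
    proof: Optional[str]  # first candidate proof the checker accepted, or None
    attempts: int         # number of candidates that were checked


def solve_hybrid(
    question: str,
    formalize: Callable[[str], str],
    propose_proofs: Callable[[str, int], list[str]],
    check: Callable[[str, str], bool],
    budget: int = 8,
) -> HybridResult:
    """Generalist formalizes, specialist proposes, checker decides."""
    # 1. Generalist: translate the natural-language question into a formal statement.
    statement = formalize(question)
    # 2. Specialist: take at most `budget` candidate proofs for that statement.
    candidates = propose_proofs(statement, budget)[:budget]
    # 3. Checker: return the first candidate that verifies; nothing else counts as a proof.
    for attempts, proof in enumerate(candidates, start=1):
        if check(statement, proof):
            return HybridResult(statement, proof, attempts)
    return HybridResult(statement, None, len(candidates))
```

In a real system, `formalize` might wrap a chat model, `propose_proofs` a prover model, and `check` a call into a Lean toolchain. Keeping the checker as the only judge means the generalist’s fluency cannot smuggle in an unverified step.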

So who rules formal math right now? By benchmark performance and design, DeepSeek-Prover-V2 is clearly ahead of GPT-4.

Its architecture, training focus, and symbolic fluency make it the top choice for formal math tasks. It is not a general chatbot—it is a purpose-built proof engine. GPT-4, while trailing in this domain, remains a crucial generalist capable of integrating reasoning with rich language, multimodal prompts, and broader AI workflows.

As we anticipate DeepSeek-Prover-V3 and GPT-4’s successors, the lines may blur. Future systems could combine formal rigor with contextual flexibility, ushering in new capabilities in scientific computing, STEM education, and even AI safety.

Formal math is more than a niche—it is the frontier. The AI that conquers it may not just assist humans in reasoning; it may eventually become a partner in the act of discovery.

For more insights on LLM evolution, benchmark analysis, and real-world AI applications, check out our other deep dives on klizos.com/blog.

Joey Ricard

Klizo Solutions was founded by Joseph Ricard, an American serial entrepreneur who has spent over ten years working in India, developing innovative tech solutions and building strong teams and sound processes. Today, he leads a team of more than 50 highly talented people and has various high-level technologies, developed in multiple frameworks, to his credit.
