If the past decade of software development was defined by cloud adoption, the next decade will be defined by intelligent autonomy. Artificial intelligence has moved far beyond chat interfaces and text generators. The models emerging in 2024 and 2025 are demonstrating something fundamentally different: persistent reasoning, contextual memory, tool use, self-correction, environment awareness, and multi-step execution. These are not traits of utilities. These are traits of operating systems.

By 2026, AI agents will no longer sit at the edges of applications as optional features. They will sit at the center of the software ecosystem, orchestrating workflows, making decisions, and managing interactions the way operating systems manage processes today. Developers are not just integrating AI into applications anymore. They are building applications inside ecosystems run by AI. In other words, intelligence becomes the runtime environment.

This is not hype. It is the natural evolution of a pattern that started the moment large language models began using tools. And developers who understand what this transformation means will be the ones shaping the technology landscape of the next decade.

When ChatGPT first appeared, developers saw it as a new interface for text generation. It produced paragraphs, summaries, and code snippets. But the breakthrough came when models were taught to interact with tools and APIs. A model that can query a database, run a shell command, or retrieve documents stops being a text generator and starts becoming a decision maker. As these capabilities improved, the line between “tool user” and “autonomous agent” blurred.

By 2025, nearly every major model family had an agentic version. Google’s Gemini models demonstrated autonomous planning. OpenAI’s GPT-4.1 demonstrated multi-turn self-correction. Claude 3.5 established a new level of contextual stability. Meta’s Llama 3.1 was widely used as the base for memory-equipped agents. Open-source frameworks like LangChain, CrewAI, and AutoGen gave developers the scaffolding to build agent teams capable of collaborating and negotiating tasks.

This shift was not accidental. It reflected a growing recognition within the industry that interaction is less important than autonomy. Developers no longer wanted models that could answer questions. They wanted models that could take responsibility for outcomes.

Once models learned to chain thoughts, select tools, and manage tasks, they began to resemble the most important component of a computer: an operating environment that knows how to schedule work.

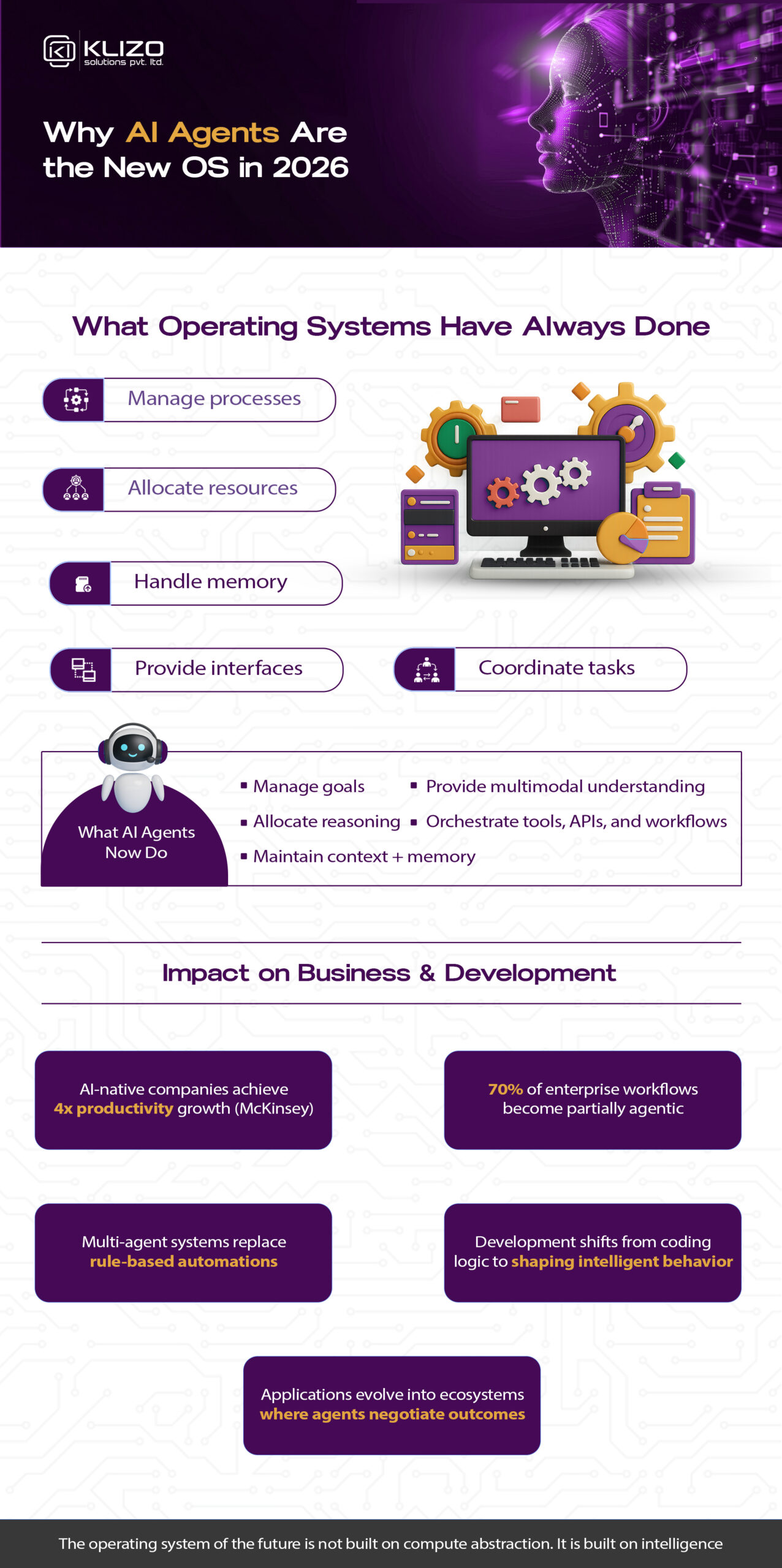

To understand why AI agents are turning into operating systems, we must examine the role an OS traditionally plays. Operating systems coordinate processes, allocate resources, manage memory, control execution, and provide an interface between users and hardware. In many ways, the OS is the silent orchestrator beneath everything the user interacts with.

AI agents are increasingly performing these same orchestration tasks but at the cognitive level rather than the hardware level. Instead of allocating CPU time, they allocate reasoning time. Instead of managing memory bits, they manage context windows and long-term knowledge bases. Instead of scheduling threads, they schedule goals, subgoals, and execution steps. Instead of launching programs, they launch tools, APIs, workflows, and microservices.
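To make "scheduling goals and subgoals instead of threads" concrete, here is a minimal sketch of a goal queue. It is only an illustration: `Goal`, `GoalScheduler`, and the priority numbers are hypothetical names invented for this example, not part of any agent framework, and a real agent would let the model decide how to decompose a goal.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class Goal:
    priority: int                 # lower number = more urgent (assumed convention)
    name: str
    subgoals: list = field(default_factory=list)


class GoalScheduler:
    """Toy scheduler: always works on the most urgent goal next."""

    def __init__(self):
        self._queue = []
        self._counter = 0  # tie-breaker so equal priorities keep insertion order

    def submit(self, goal):
        heapq.heappush(self._queue, (goal.priority, self._counter, goal))
        self._counter += 1

    def run(self):
        executed = []
        while self._queue:
            _, _, goal = heapq.heappop(self._queue)
            if goal.subgoals:
                # Decompose: a goal with subgoals is replaced by its parts.
                for sub in goal.subgoals:
                    self.submit(sub)
            else:
                executed.append(goal.name)
        return executed


scheduler = GoalScheduler()
scheduler.submit(Goal(2, "send report"))
scheduler.submit(
    Goal(1, "prepare report", subgoals=[Goal(1, "fetch data"), Goal(1, "summarize data")])
)
order = scheduler.run()
```

The heap plays the role a run queue plays in a classic OS scheduler. The difference is that the units of work are goals rather than threads.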

This is why analysts across the industry began calling the emerging paradigm an “AI Operating System.” Although agents do not manage hardware, they manage everything above hardware. Applications no longer run because a user clicks a button. They run because an intelligent agent chooses the sequence of actions, interprets the state, retrieves the needed information, and decides what makes sense next.

The OS abstraction is shifting upward from the machine level to the intelligence level.

A modern AI-native system contains several layers that mirror classic OS architectures in surprisingly direct ways. At the foundation sits the reasoning engine, the core LLM or multimodal model that provides the system’s deliberation, planning, and inference capabilities. This is analogous to the kernel. It interprets intent, manages internal logic, and controls the flow of tasks.

Above the core model lies the retrieval layer. This is where the modern RAG (Retrieval Augmented Generation) ecosystem lives. But RAG has evolved. It is no longer simply embedding text into a vector store. RAG systems now blend semantic search, graph search, structured pipelines, hybrid scoring, and multimodal indexing. The retrieval layer is the memory manager of the AI OS. It feeds the model relevant knowledge without overwhelming it.
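Hybrid scoring is easier to reason about with a small example. The sketch below blends a keyword-overlap score with a cosine similarity score. It makes some loud assumptions: the term-count vectors are a stand-in for real embeddings, and `hybrid_search`, `alpha`, and `top_k` are hypothetical names chosen for this illustration.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def keyword_score(query, doc):
    """Fraction of query terms that appear in the document."""
    q_terms, d_terms = set(tokenize(query)), set(tokenize(doc))
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def cosine_score(query, doc):
    """Cosine similarity over term counts (a stand-in for embedding similarity)."""
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    dot = sum(q[t] * d[t] for t in q)
    q_norm = math.sqrt(sum(v * v for v in q.values()))
    d_norm = math.sqrt(sum(v * v for v in d.values()))
    return dot / (q_norm * d_norm) if q_norm and d_norm else 0.0


def hybrid_search(query, docs, alpha=0.5, top_k=2):
    """Rank documents by a weighted blend; alpha weights the keyword side."""
    scored = [
        (alpha * keyword_score(query, doc) + (1 - alpha) * cosine_score(query, doc), doc)
        for doc in docs
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


docs = [
    "refund policy for annual plans",
    "office holiday schedule",
    "how to request a refund",
]
results = hybrid_search("refund policy", docs)
```

Only the top-ranked documents are returned, which is one simple way to feed the model relevant knowledge without overwhelming it.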

Then we move into the tool execution layer. This consists of everything the agent can use: APIs, microservices, browsers, webhooks, internal services, CLIs, cloud functions, databases, and code execution environments. The AI OS dynamically selects these tools based on its interpretation of the user’s goal. This is the system’s process execution layer.
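A tool execution layer can be pictured as a registry of named callables that the agent picks from. The following is a minimal sketch under stated assumptions: `ToolRegistry` and the two toy tools are hypothetical, and the model's choice is hard-coded where a real system would parse it from model output.

```python
from typing import Callable, Dict


class ToolRegistry:
    """Maps tool names to plain Python callables the agent may invoke."""

    def __init__(self):
        self._tools: Dict[str, Callable[..., object]] = {}
        self._descriptions: Dict[str, str] = {}

    def register(self, name, description):
        def decorator(func):
            self._tools[name] = func
            self._descriptions[name] = description
            return func
        return decorator

    def describe(self):
        """Tool catalog that could be shown to a model when it selects a tool."""
        return dict(self._descriptions)

    def call(self, name, **kwargs):
        if name not in self._tools:
            raise KeyError(f"unknown tool: {name}")
        return self._tools[name](**kwargs)


registry = ToolRegistry()


@registry.register("add", "Add two numbers")
def add(a, b):
    return a + b


@registry.register("word_count", "Count the words in a text")
def word_count(text):
    return len(text.split())


# Assumption: in a real system the model emits this choice; here it is hard-coded.
model_choice = {"tool": "word_count", "args": {"text": "agents select tools"}}
result = registry.call(model_choice["tool"], **model_choice["args"])
```

Keeping descriptions next to the callables means the same registry can both advertise tools to the model and dispatch the calls it requests.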

Beyond tool use lies the orchestration layer. This is where multi-agent intelligence lives. Instead of a single model processing everything, systems in 2026 increasingly delegate responsibilities to specialized agents: planners, validators, critics, compliance auditors, research assistants, data synthesizers, and UI generators. These agents communicate with each other, negotiate tasks, and monitor each other’s output. They behave like distributed services inside a microservice architecture.
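The division of labor between specialized agents can be sketched with three plain functions. This is a deliberately simplified illustration: `planner`, `worker`, and `validator` are hypothetical stand-ins, and in a real system each would be backed by a model call rather than string handling.

```python
def planner(task):
    """Split a task into ordered steps (a real planner would call a model)."""
    return [part.strip() for part in task.split(" then ")]


def worker(step):
    """Pretend to execute a step and report its output."""
    return f"done: {step}"


def validator(step, output):
    """Accept an output only if it refers to the step it claims to complete."""
    return output == f"done: {step}"


def orchestrate(task):
    """Route each planned step through a worker, then through a validator."""
    results = []
    for step in planner(task):
        output = worker(step)
        if not validator(step, output):
            raise RuntimeError(f"validation failed for step: {step}")
        results.append(output)
    return results


report = orchestrate("collect invoices then reconcile totals")
```

The point of the structure is that no agent trusts its own output: work passes to a separate role for checking before it is accepted.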

Above orchestration sits the interaction layer. This includes chat interfaces, dashboards, plugins, voice interfaces, and application APIs that humans and other systems use to communicate with the AI OS. Finally, everything is wrapped inside a governance layer that enforces permissions, ethical constraints, safety rules, and corporate policies.
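A governance layer can be as simple as a wrapper that checks every tool request against a policy before running it. The sketch below assumes a hypothetical `Policy` with an allow-list and a call budget. Real deployments would add far richer rules, but the shape is similar.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    allowed_tools: set = field(default_factory=set)
    max_calls: int = 5  # hypothetical per-session budget


class PolicyViolation(Exception):
    """Raised when an agent requests something the policy forbids."""


class GovernedExecutor:
    """Checks each tool request against the policy before executing it."""

    def __init__(self, tools, policy):
        self._tools = tools
        self._policy = policy
        self._calls = 0

    def call(self, name, *args):
        if name not in self._policy.allowed_tools:
            raise PolicyViolation(f"tool not permitted: {name}")
        if self._calls >= self._policy.max_calls:
            raise PolicyViolation("call budget exhausted")
        self._calls += 1
        return self._tools[name](*args)


tools = {
    "read": lambda key: f"value of {key}",
    "delete": lambda key: None,
}
executor = GovernedExecutor(tools, Policy(allowed_tools={"read"}, max_calls=1))
first = executor.call("read", "x")
```

Here the agent can read but never delete, and it cannot exceed its budget, regardless of what its reasoning suggests.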

By the time all these layers are combined, the architecture looks indistinguishable from an operating system. The only difference is that instead of orchestrating processors and memory blocks, it orchestrates intelligence and purpose.

Developers now face a paradigm shift similar to the movement from procedural to object-oriented programming, or from on-prem servers to cloud-native architectures. AI-native software development requires new thinking about what is coded, what is delegated, and what is controlled through policy rather than logic.

Instead of writing every line of logic explicitly, developers define the boundaries within which intelligent agents operate. Instead of planning every UI step, developers describe desired outcomes and constraints. Instead of building rigid workflows, they allow workflows to emerge from dynamic reasoning.

This changes how software is designed at a foundational level. A traditional application is deterministic. An AI-native application is probabilistic but controlled. Its behavior cannot be fully predicted, but it can be guided, constrained, audited, and refined.

For developers, this means shifting from instruction authorship to intelligence orchestration. You do not tell the agent exactly how to act. You define the rules that shape how it will decide to act.

This is why Agent Oriented Programming (AOP) is becoming a legitimate discipline. The developer’s role becomes more conceptual and more architectural. You define roles, goals, boundaries, memory schemas, retrieval strategies, and safety policies. The agent determines the sequence of actions.

Traditional software relies on fixed workflows: step 1 follows step 0, step 2 follows step 1. These workflows break easily when unexpected conditions arise. Agents do not rely on linear workflows. They rely on planning.

If an agent encounters missing information, it retrieves it.

If a tool call fails, it retries or switches strategies.

If a dataset is ambiguous, it asks for clarification.

If a task is too large, it decomposes it.

If an action produces a poor result, it self-corrects.

This adaptability is not something developers can manually program. It emerges from the agent’s reasoning capabilities, long-memory context, and multi-agent collaboration. As a result, systems built on agentic foundations become more resilient than rule-based workflows.
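The harness around an agent often makes two of these behaviors explicit: retrying a failed tool call and switching strategies. The sketch below shows only that outer loop. `run_with_recovery` and the two example strategies are hypothetical, and the decision of which strategies to try would come from the agent's own reasoning in a real system.

```python
def run_with_recovery(strategies, max_retries=2):
    """Try each strategy in order, retrying a failing one before moving on."""
    errors = []
    for strategy in strategies:
        for attempt in range(1, max_retries + 1):
            try:
                return strategy(), errors
            except Exception as exc:
                # Keep a record so the failure path can be reviewed later.
                errors.append(f"{strategy.__name__} attempt {attempt}: {exc}")
    raise RuntimeError("all strategies failed: " + "; ".join(errors))


def primary_api():
    raise TimeoutError("service unavailable")


def cached_copy():
    return "result from cache"


result, errors = run_with_recovery([primary_api, cached_copy])
```

A fixed workflow would stop at the first timeout. Here the failure is recorded and the next strategy is tried.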

This explains why enterprise adoption of autonomous AI surged dramatically, with Gartner reporting more than 240 percent growth in agent-based automation across sectors. The trend is accelerating into 2026 as organizations realize that the only scalable way to manage complexity is through systems that can adapt to it.

In 2026, developers do not build step-by-step task logic. They build the tools that agents use to accomplish goals. This includes API endpoints, data interfaces, execution environments, cloud functions, and guardrails. Developers become the architects of possibility rather than enforcers of prescribed logic.

Instead of scripting processes, developers structure domains. Instead of defining workloads, they define capabilities. Instead of specifying how to do something, they specify what the system is allowed to do and what constraints govern it.

This drastically reduces development time while increasing the intelligence of applications. It also changes how debugging works. Instead of debugging code flows, developers debug agent behavior, reviewing logs that show not only errors but decision paths.
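Debugging decision paths requires logs that capture why an action was chosen, not just what happened. A minimal sketch of such a trace follows. The `Decision` fields are an assumption about what is useful to record, not a standard schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Decision:
    step: int
    observation: str
    chosen_action: str
    reason: str


@dataclass
class DecisionTrace:
    decisions: list = field(default_factory=list)

    def record(self, observation, chosen_action, reason):
        """Append one decision, numbering steps from 1."""
        self.decisions.append(
            Decision(len(self.decisions) + 1, observation, chosen_action, reason)
        )

    def actions(self):
        return [d.chosen_action for d in self.decisions]

    def to_json(self):
        """Serialize the full path so it can be stored and reviewed later."""
        return json.dumps([asdict(d) for d in self.decisions], indent=2)


trace = DecisionTrace()
trace.record("invoice total missing", "query_database", "needed the amount to proceed")
trace.record("database timed out", "use_cached_value", "cache is fresh enough")
exported = trace.to_json()
```

Reading the trace top to bottom shows the path the agent took and the reason it gave at each step, which is the information a stack trace alone cannot provide.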

The modern AI agent OS relies heavily on knowledge retrieval. Models cannot rely solely on their training data. They must be connected to internal business knowledge, documentation, user history, product databases, and regulatory repositories. This is why RAG evolved into RAG 2.0: a collection of hybrid retrieval strategies that manage structured and unstructured data, graphs, contexts, embeddings, and real-time query processing.

Retrieval frameworks are becoming the memory backbone of autonomous systems. Developers who master RAG architectures will build more accurate, controllable agents. This is a core skill for 2026 and beyond.

Companies that adapt early to AI agents will outperform those that cling to traditional workflows. McKinsey predicts a 4 to 1 productivity gap between AI-native companies and non-AI companies by 2027. The economic implications are enormous.

An AI OS does not simply speed up tasks. It transforms how tasks are done. It reduces operational friction. It eliminates repetitive manual overhead. It enables always-on intelligence embedded into daily processes. In fields like HR, finance, customer support, logistics, and compliance, the shift is already visible.

One example is the evolution of HR automation. Systems like our InterviewScreener platform already use AI to parse resumes, analyze candidate responses, and generate structured reports. By 2026, these systems will become fully autonomous, managing entire hiring pipelines. You can read more about automated pipeline improvements in related articles on KlizoSolutions.

For readers who want to explore the foundations of autonomous systems, several reputable sources offer excellent technical analysis:

Google AI Blog: https://blog.google

OpenAI Research: https://openai.com/research

Anthropic Model Reports: https://www.anthropic.com/news

These provide deeper insights into agentic architectures, memory models, and emerging AI OS frameworks.

The most important change happening in software development is that intelligence is becoming the environment itself. AI is not a tool inside software. It is becoming the layer that runs software. In the same way that operating systems abstract away hardware complexity, AI agents abstract away task complexity.

Developers who understand this shift will design systems that are more adaptable, more resilient, and more powerful than anything possible in traditional architectures. Developers who ignore it will be building on patterns that will feel outdated as early as 2026.

We are entering the era where applications no longer instruct machines. They negotiate with intelligence. And the developers who master this new operating paradigm will define the future of technology.

Joey Ricard

Klizo Solutions was founded by Joseph Ricard, a serial entrepreneur from America who has spent over ten years working in India, developing innovative tech solutions and building strong teams and processes. Today he leads a team of more than 50 talented people and has various high-level technologies, developed in multiple frameworks, to his credit.