The rapid acceleration of open-source large language models (LLMs) in 2024–2025 has redefined how organizations build GenAI solutions. As more developers opt for self-hosted, open-source alternatives to proprietary APIs, choosing the right LLM becomes critical – especially for complex use cases like Retrieval-Augmented Generation (RAG), domain-specific finetuning, and internal copilots.

In this showdown, we compare three of the most prominent contenders in the open-source LLM space: LLaMA 3 (8B), Mistral 7B, and Command R+.

We’ll evaluate them across key dimensions – RAG performance, ease of finetuning, self-hosting viability, and production-grade readiness – to help you decide which LLM best suits your GenAI workloads.

Command R+ highlights: best-in-class for RAG benchmarks; native support for tool-calling

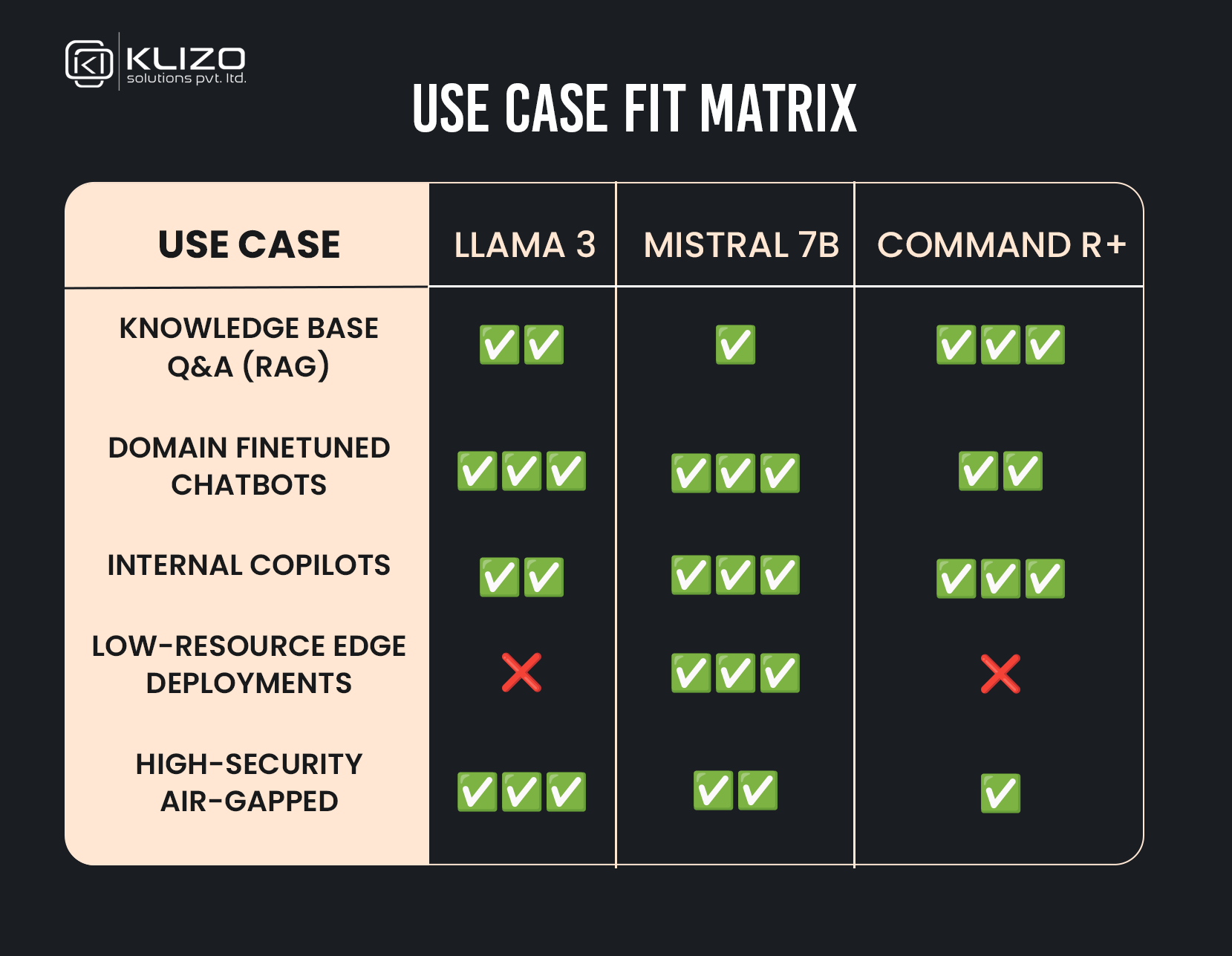

Each of these models excels in specific domains, but here’s how we recommend choosing:

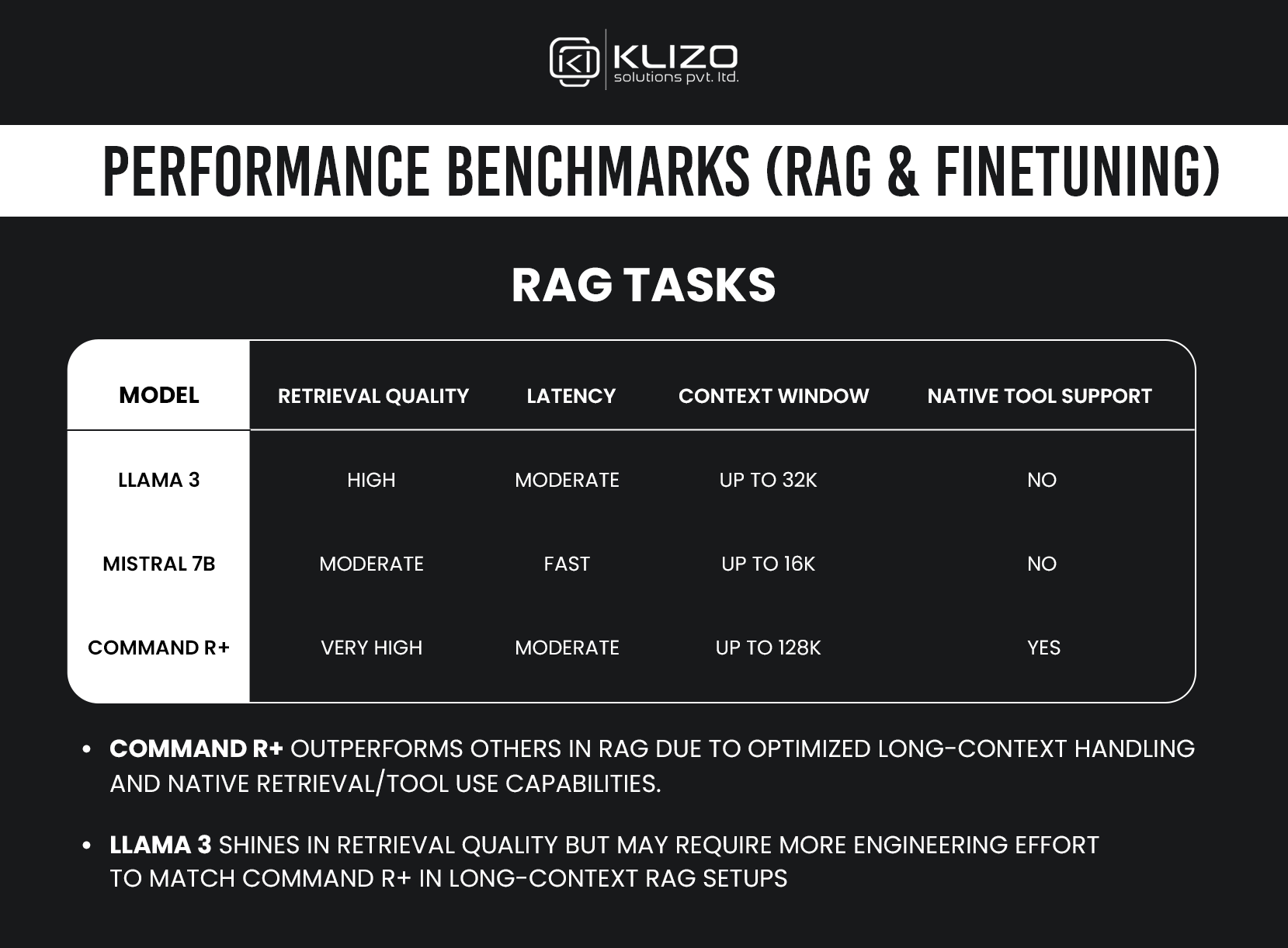

✅ Winner: Command R+ – Long context window, tool integration, and RAG-native design
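To make the retrieve-then-generate pattern behind RAG concrete, here is a minimal sketch in plain Python. It assumes a toy in-memory corpus and uses bag-of-words cosine similarity as a stand-in for a real embedding model; the function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical and not part of any model's API. The resulting prompt would then be sent to whichever LLM you choose.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Put retrieved passages ahead of the question so the model can ground its answer."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical toy corpus, for illustration only
docs = [
    "Refunds are processed within five business days.",
    "The VPN client is updated every quarter.",
    "Refunds require the original order number.",
]
question = "How long do refunds take?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```

A longer context window lets you raise `k` and pass more passages per request, which is one reason context length matters for RAG workloads.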

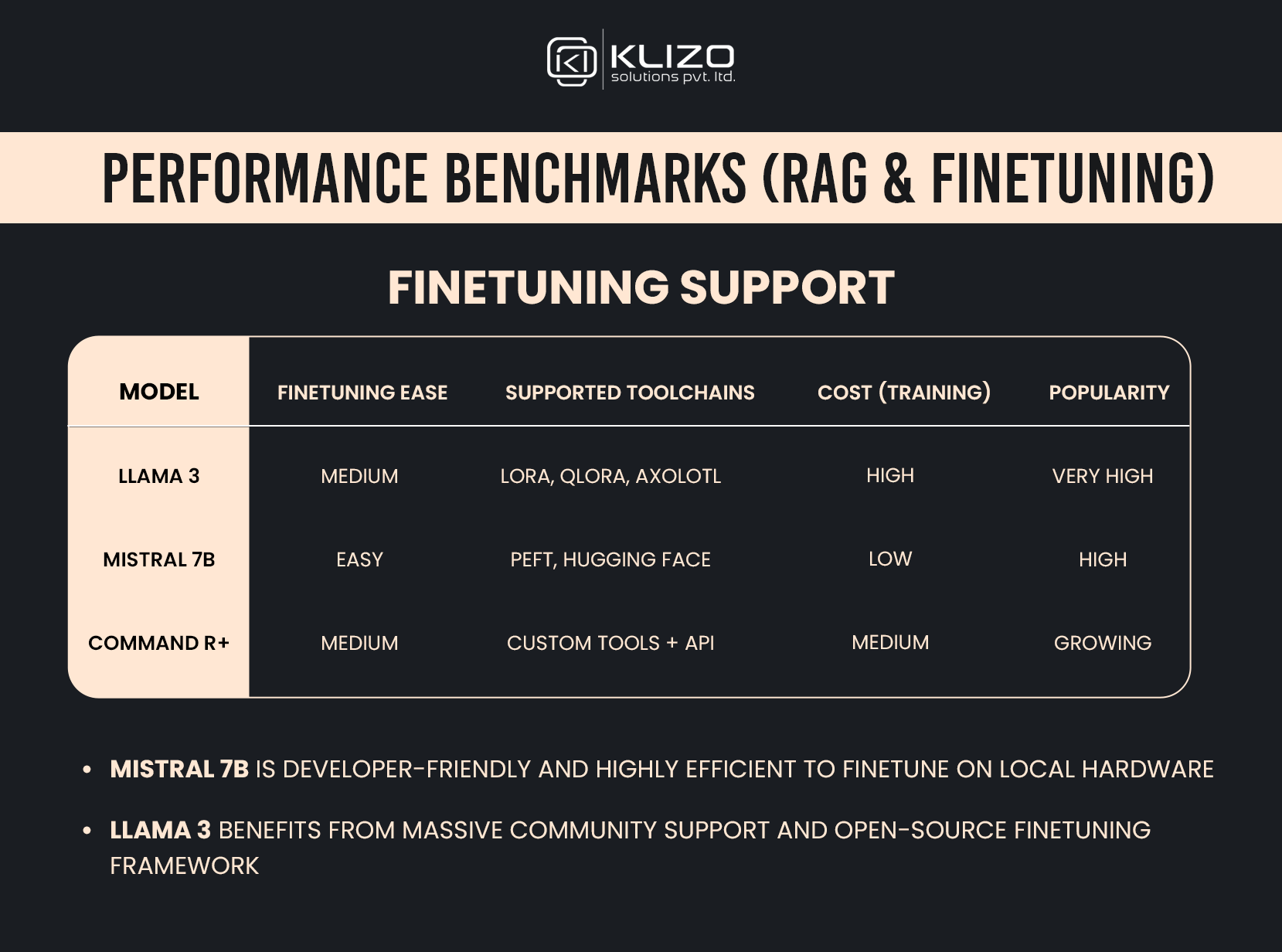

✅ Winner: Mistral 7B – Easiest to finetune, quantize, and deploy on modest GPUs

✅ Winner: LLaMA 3 (8B) – Broad ecosystem, community tools, and finetuning flexibility
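A rough way to see why models in the 7B to 8B range fit on modest GPUs is to estimate the memory needed for the weights alone: parameter count times bits per weight, divided by eight. The sketch below uses nominal parameter counts (7 and 8 billion, an assumption rounded from the model names) and ignores the KV cache, activations, and quantization overhead, so treat the results as lower bounds rather than measured figures.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Nominal parameter counts (assumption: rounded from the model names)
models = {"Mistral 7B": 7e9, "LLaMA 3 (8B)": 8e9}

for name, n_params in models.items():
    for bits in (16, 8, 4):
        gb = weight_memory_gb(n_params, bits)
        print(f"{name:14s} {bits:2d}-bit: ~{gb:.1f} GB")
```

By this estimate, the weights of a 7B model take about 14 GB at 16-bit precision and about 3.5 GB at 4-bit.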

The open-source LLM space is heating up, and with it comes the opportunity to build more tailored, cost-effective, and controllable GenAI solutions. Whether you’re experimenting with finetuning, rolling out a RAG-powered chatbot, or deploying in air-gapped environments, the right model makes all the difference.

LLaMA 3, Mistral 7B, and Command R+ each bring something unique to the table:

Command R+ stands out for RAG-native performance and long-context reasoning.

Mistral 7B is ideal for lean deployments and fast iteration cycles.

LLaMA 3 remains a powerhouse backed by a thriving ecosystem and versatile tooling.

Still unsure which LLM fits your use case? At Klizo, we help teams architect, finetune, and deploy AI solutions that deliver real-world value.

Reach out to us here to explore what’s possible with open-source GenAI.

Joey Ricard

Klizo Solutions was founded by Joseph Ricard, an American serial entrepreneur who has spent over ten years working in India, developing innovative tech solutions and building strong teams and processes. Today he leads a team of more than 50 people and has technologies built across multiple frameworks to his credit.
